Deep Learning in Image Recognition: Techniques and Challenges

In the vast realm of artificial intelligence, deep learning has emerged as a game-changer, especially in the field of image recognition.


The ability of machines to recognize and categorize images, much as the human brain does, has opened up a plethora of opportunities and challenges. Let's delve into the techniques deep learning offers for image recognition and the hurdles that come with each.

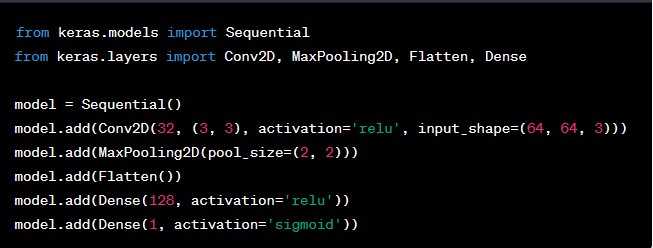

Convolutional Neural Networks (CNNs)

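Technique: CNNs are the backbone of most modern image recognition systems. Each convolutional layer slides small learned filters across its input, so every neuron responds only to a local patch of the image, called its receptive field, and the same filter weights are reused at every position. Neighboring receptive fields overlap, and stacking layers lets deeper neurons see progressively larger regions, so the network builds features hierarchically, from edges and textures to object parts and whole objects. This combination of local connectivity and weight sharing is what distinguishes CNNs from fully connected networks.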
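To make the receptive-field idea concrete, here is a minimal NumPy sketch of the sliding-window operation a single convolutional filter performs. It is illustrative only: the 3x3 kernel is hand-picked as a vertical-edge detector rather than learned, and there is one channel, no padding, and a stride of 1. Like most deep learning libraries, it computes a cross-correlation (the kernel is not flipped).

```python
import numpy as np

def conv2d(image, kernel):
    """Valid 2D cross-correlation, the operation deep learning libraries call "convolution"."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            patch = image[i:i + kh, j:j + kw]   # receptive field of output (i, j)
            out[i, j] = np.sum(patch * kernel)  # the same weights are applied at every position
    return out

# A 5x5 image with a vertical edge between columns 1 and 2 (0-indexed)
image = np.array([[0, 0, 1, 1, 1]] * 5, dtype=float)
# Hand-picked vertical-edge detector; a CNN would learn such weights from data
kernel = np.array([[-1, 0, 1]] * 3, dtype=float)

feature_map = conv2d(image, kernel)
print(feature_map)  # every row is [3. 3. 0.]
```

Each output value depends only on a 3x3 patch, adjacent patches overlap, and the same nine weights are reused everywhere. The strong responses mark the positions whose receptive field straddles the edge; the uniform region on the right produces zero.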

Challenges: While CNNs are powerful, they typically require large amounts of labeled data to train. Overfitting, where the model performs exceptionally well on training data but poorly on new data, is also a concern. Additionally, CNNs can be "fooled" by adversarial examples: small, deliberately crafted changes to an image, often imperceptible to humans, that cause the model to misclassify it.
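Adversarial examples are easiest to see on a model small enough to inspect. The sketch below applies the fast gradient sign method (FGSM), one well-known attack, to a toy logistic-regression classifier rather than a CNN; the weights, bias, and input are made up for illustration. FGSM moves every pixel by a fixed small amount `eps` in whichever direction increases the model's loss.

```python
import numpy as np

rng = np.random.default_rng(0)
d = 784                        # input size, e.g. a flattened 28x28 grayscale image
w = rng.normal(size=d)         # hypothetical weights of an already-trained linear classifier
b = -0.5 * w.sum()             # bias chosen so a uniform mid-gray image sits on the decision boundary

def predict_proba(x):
    """Probability that x belongs to class 1 under the toy logistic model."""
    return 1.0 / (1.0 + np.exp(-(x @ w + b)))

def fgsm(x, y, eps):
    """Fast gradient sign method: shift every pixel by eps in the loss-increasing direction."""
    grad_x = (predict_proba(x) - y) * w                  # d(binary cross-entropy)/dx for a logistic model
    return np.clip(x + eps * np.sign(grad_x), 0.0, 1.0)  # keep pixels in [0, 1]

x = 0.5 + 0.01 * np.sign(w)    # a clean input the model assigns to class 1
x_adv = fgsm(x, y=1, eps=0.03) # no pixel changes by more than 0.03

print(f"clean: {predict_proba(x):.3f}, adversarial: {predict_proba(x_adv):.3f}")
```

No pixel moves by more than 0.03, yet the prediction flips from class 1 to class 0: each tiny change pushes the output in the same direction, and the effect adds up across all 784 inputs. Attacks on real CNNs follow the same recipe, using backpropagation to obtain the gradient with respect to the input image.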

Transfer Learning

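Technique: Transfer learning uses a model pretrained on a large dataset (ImageNet is a common choice) as the starting point for a new task. The idea is to reuse the general visual features learned on the source problem, such as edges, textures, and shapes, for a different but related target problem, usually by retraining only the final layers or by fine-tuning the whole network with a small learning rate.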
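The core mechanic, reusing frozen feature layers and training only a new output layer, can be sketched in NumPy. This is a minimal illustration under loud assumptions: `W_backbone` is a hypothetical stand-in filled with random numbers, whereas a real pretrained backbone would be loaded from a model trained on a large source dataset, and the target data is synthetic.

```python
import numpy as np

rng = np.random.default_rng(42)

# Hypothetical stand-in for a pretrained backbone. Real transfer learning would
# load weights learned on a large source dataset; random weights are used here
# only so the sketch runs on its own.
W_backbone = rng.normal(scale=0.1, size=(64, 32))

def extract_features(x):
    """Frozen backbone: its weights are never updated below."""
    return np.maximum(0.0, x @ W_backbone)  # ReLU features

def bce(p, y):
    """Mean binary cross-entropy."""
    eps = 1e-12
    return -np.mean(y * np.log(p + eps) + (1 - y) * np.log(1 - p + eps))

# Small synthetic target dataset (illustration only)
X = rng.normal(size=(200, 64))
y = (X[:, 0] + X[:, 1] > 0).astype(float)

feats = extract_features(X)         # computed once because the backbone is frozen
w_head, b_head = np.zeros(32), 0.0  # new task-specific head: the only trainable parameters
losses = []

for step in range(500):
    p = 1.0 / (1.0 + np.exp(-(feats @ w_head + b_head)))
    losses.append(bce(p, y))
    err = p - y                     # d(loss)/d(logit) for each sample
    w_head -= 0.1 * feats.T @ err / len(y)
    b_head -= 0.1 * err.mean()

print(f"head loss: {losses[0]:.3f} -> {losses[-1]:.3f}")
```

Only `w_head` and `b_head` change during training. In a framework such as PyTorch, the same effect is usually achieved by setting `requires_grad = False` on the backbone's parameters; unfreezing some of them later with a small learning rate is what is commonly called fine-tuning.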

Challenges: One of the main challenges with transfer learning is the difference in data distribution between the source and target tasks. If the domains are too different (for example, everyday photographs versus medical scans), the transferred features may fit the new task poorly. In the worst case this leads to negative transfer, where starting from the pretrained model actually hurts performance compared with training from scratch.

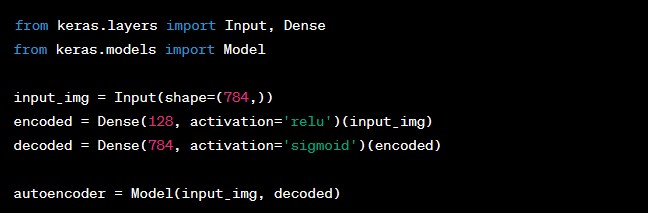

Autoencoders

Technique: Autoencoders are neural networks trained to reproduce their input after compressing it into a lower-dimensional code, often called the bottleneck. They can be used for image denoising and dimensionality reduction, which can be particularly useful in image recognition tasks.
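A minimal NumPy sketch of the compress-then-reconstruct loop, assuming a purely linear autoencoder trained with plain gradient descent on synthetic 8-pixel data that secretly lies on a 2-D subspace. Real image autoencoders use convolutional, nonlinear encoders and decoders, but the training objective, minimizing reconstruction error through a narrow code, is the same.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic "images": 8-pixel vectors lying on a 2-D subspace, plus a little noise
basis = np.linalg.qr(rng.normal(size=(8, 2)))[0].T   # 2 orthonormal directions in R^8
X = rng.normal(size=(500, 2)) @ basis + 0.01 * rng.normal(size=(500, 8))

W_enc = 0.1 * rng.normal(size=(8, 2))   # encoder: 8 pixels -> 2-unit code
W_dec = 0.1 * rng.normal(size=(2, 8))   # decoder: 2-unit code -> 8 pixels
lr = 0.05
losses = []

for step in range(1000):
    code = X @ W_enc                    # compress
    X_hat = code @ W_dec                # reconstruct
    residual = X_hat - X
    losses.append(np.sum(residual ** 2) / len(X))  # mean squared reconstruction error per sample
    grad_out = 2 * residual / len(X)               # d(loss)/d(X_hat)
    grad_dec = code.T @ grad_out
    grad_enc = X.T @ (grad_out @ W_dec.T)
    W_dec -= lr * grad_dec
    W_enc -= lr * grad_enc

print(f"reconstruction error: {losses[0]:.3f} -> {losses[-1]:.4f}")
```

Because the 2-unit code matches the data's true dimensionality, the reconstruction error falls to roughly the noise level. Shrinking the code to a single unit would force the model to discard one of the two directions, which is exactly the kind of information loss that makes the bottleneck size a design decision.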

Challenges: The main challenge with autoencoders is the information lost during encoding. If the bottleneck is too small or the architecture is poorly designed, the code may fail to capture the essential features of the data.

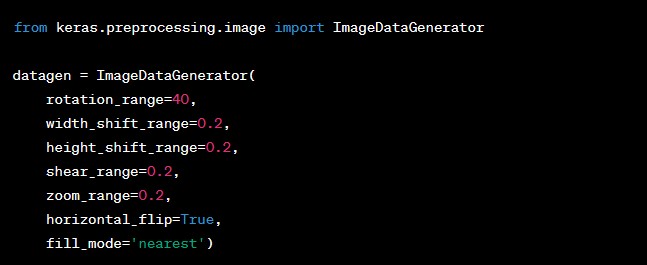

Data Augmentation

Technique: Data augmentation involves creating new training samples by applying various transformations to the existing data. For images, this could mean rotations, zooming, flipping, or cropping.
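A minimal NumPy sketch of on-the-fly augmentation, producing a differently flipped, rotated, and cropped copy of a toy grayscale image on each call. The function name `random_augment` and the restriction to 90-degree rotations are assumptions for illustration; libraries such as torchvision ship ready-made transforms (for example `RandomHorizontalFlip`, `RandomRotation`, and `RandomResizedCrop`) that also cover arbitrary angles and zooming.

```python
import numpy as np

rng = np.random.default_rng(0)

def random_augment(image, crop_size, rng):
    """Return one randomly flipped, rotated and cropped copy of an (H, W) image."""
    if rng.random() < 0.5:
        image = np.fliplr(image)                # horizontal flip
    image = np.rot90(image, k=rng.integers(4))  # rotate by 0, 90, 180 or 270 degrees
    h, w = image.shape
    top = rng.integers(h - crop_size + 1)       # random crop position
    left = rng.integers(w - crop_size + 1)
    return image[top:top + crop_size, left:left + crop_size]

image = np.arange(36, dtype=float).reshape(6, 6)  # toy 6x6 "image"
batch = np.stack([random_augment(image, crop_size=4, rng=rng) for _ in range(8)])
print(batch.shape)  # (8, 4, 4)
```

Each of the eight samples is a 4x4 view of the same source image, so the label stays the same while the pixels the model sees differ from one sample to the next.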

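Challenges: While data augmentation can help improve model performance by providing more diverse training data, it's not a silver bullet. Over-augmentation, meaning transformations that are too aggressive or that change what an image depicts (rotating a handwritten "6" until it looks like a "9", for instance), produces training data that no longer resembles real inputs and can lead to models that generalize poorly to new, real-world data.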

Generative Adversarial Networks (GANs)

Technique: GANs consist of two networks trained against each other: a generator, which creates images, and a discriminator, which tries to tell generated images apart from real ones. Once trained, the generator can produce new, synthetic images to augment a training set.
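The generator-versus-discriminator setup is usually written as a two-player minimax game. In the original GAN formulation (Goodfellow et al., 2014), the discriminator D outputs the probability that its input is real, the generator G maps a random noise vector z to an image, and the two optimize a shared value function in opposite directions:

```latex
\min_G \max_D \; V(D, G) =
  \mathbb{E}_{x \sim p_{\text{data}}}\!\left[\log D(x)\right]
  + \mathbb{E}_{z \sim p_z}\!\left[\log\bigl(1 - D(G(z))\bigr)\right]
```

D tries to maximize V by scoring real images near 1 and generated ones near 0, while G tries to minimize it by pushing D(G(z)) toward 1. When D wins too decisively, the second term saturates and gives G very weak gradients, so in practice G is often trained to maximize log D(G(z)) instead.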

Challenges: GANs are notoriously hard to train. They require a careful balance between the generator and discriminator; if one overpowers the other, training can fail to converge. The generator may also suffer from mode collapse, producing only a narrow range of similar outputs.

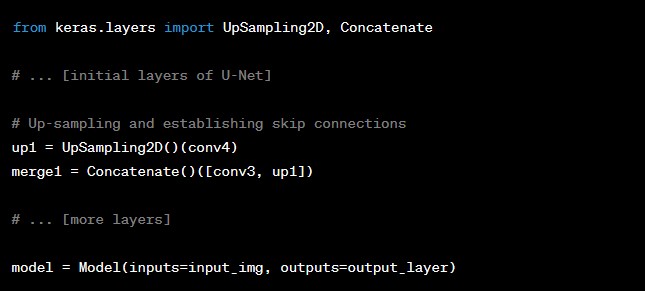

Image Segmentation Using U-Net

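Technique: U-Net is a convolutional neural network originally designed for biomedical image segmentation, the task of assigning a class label to every pixel. It has an encoder (contracting) path that captures context, a decoder (expanding) path that restores spatial resolution, and skip connections that pass high-resolution feature maps from each encoder stage to the matching decoder stage.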
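The data flow through one encoder-decoder level and its skip connection can be traced with array shapes in NumPy. This is a shape-level sketch, not a trainable U-Net: the convolutions at each level are omitted, and nearest-neighbour upsampling stands in for the learned up-convolution of the original architecture. The helper names `max_pool2` and `upsample2` are hypothetical.

```python
import numpy as np

def max_pool2(x):
    """2x2 max pooling on a (C, H, W) feature map: halves height and width."""
    c, h, w = x.shape
    return x.reshape(c, h // 2, 2, w // 2, 2).max(axis=(2, 4))

def upsample2(x):
    """Nearest-neighbour upsampling of a (C, H, W) feature map: doubles height and width."""
    return x.repeat(2, axis=1).repeat(2, axis=2)

rng = np.random.default_rng(0)
x = rng.normal(size=(16, 64, 64))   # encoder features at full resolution

skip = x                            # saved for the skip connection
bottleneck = max_pool2(x)           # encoder path: (16, 32, 32)
up = upsample2(bottleneck)          # decoder path: back to (16, 64, 64)
merged = np.concatenate([up, skip], axis=0)  # skip connection: concatenate along channels

print(bottleneck.shape, merged.shape)  # (16, 32, 32) (32, 64, 64)
```

Pooling halves the resolution, and upsampling alone cannot recover the detail it discarded; concatenating the saved full-resolution features along the channel axis gives the decoder direct access to that detail.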

Challenges: U-Nets can sometimes produce overly smooth segmentations that miss fine details, such as thin structures or sharp object boundaries, in complex images.

Conclusion

Deep learning has revolutionized image recognition, offering techniques that can match and, on some benchmarks, even surpass human performance. However, with great power come great challenges. As we continue to push the boundaries of what's possible with image recognition, it's essential to be aware of these challenges and work towards addressing them. The future of image recognition, powered by deep learning, is bright, but it requires continuous learning and adaptation.

Opinions expressed by DZone contributors are their own.
