Identity and Access Management Solution to Safeguard LLMs

One of the essential security measures for LLMs is securing access to them so that only authorized individuals can access data.


In the era of artificial intelligence, the use of large language models (LLMs) is increasing rapidly. These models offer amazing opportunities but also introduce new privacy and security challenges. One of the essential security measures for addressing these challenges is securing access to LLMs, so that only authorized individuals can access data and perform only the actions they are permitted to perform. This can be achieved using identity and access management (IAM).

Identity and access management acts as a security guard for critical data and systems. It works much like a physical security guard who controls who can enter a building and who can view the security camera footage. When you enter a building, a security guard may ask for your identification, where you live, and so on. The guard can also monitor your activity in and around the building and step in if they see something suspicious. Similarly, IAM ensures that only authorized individuals can access and use large language models. IAM also keeps a log of user activity so that suspicious behavior can be identified.

Is IAM the solution to large language model security problems? In this article, we will explore the role of IAM in securing LLMs, but let’s first understand what LLMs are and how they are used.

Language Modeling

Learning a new language is not an easy task. Humans have to learn the vocabulary, grammatical rules, and expressions in order to fully learn a language. For machines, however, learning a new language is not that hard, provided they are given a large amount of correct text data. From this data, a machine can learn the grammatical rules of a language as well as its vocabulary and expressions.

Teaching a machine a language is known as language modeling. There are two main types of language modeling:

- Statistical language models. These models are based on probability and statistics. They observe how words appear together in a large data set and use those patterns to predict which word is likely to come next.

- Neural language models. These models use artificial neural networks (loosely inspired by the human brain) to learn a language. They learn not only words and patterns but also expressions and the meaning of a sentence as a whole.
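To make the statistical approach concrete, here is a minimal bigram model in Python. It counts which word follows which in a toy corpus and turns those counts into next-word probabilities. The corpus and function names are illustrative assumptions. Practical statistical models use far larger corpora and techniques such as smoothing to handle word pairs they have never seen.

```python
from collections import Counter, defaultdict


def train_bigram_model(corpus):
    """Count how often each word is followed by each other word (a bigram model)."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for current_word, next_word in zip(words, words[1:]):
            counts[current_word][next_word] += 1
    return counts


def next_word_probabilities(counts, word):
    """Turn raw follow-counts into probabilities P(next word | word)."""
    followers = counts.get(word.lower(), Counter())
    total = sum(followers.values())
    if total == 0:
        return {}  # word never seen, or never followed by another word
    return {w: c / total for w, c in followers.items()}


def predict_next_word(counts, word):
    """Return the most likely next word, or None if the word is unknown."""
    probs = next_word_probabilities(counts, word)
    return max(probs, key=probs.get) if probs else None


# Hypothetical toy training corpus
corpus = [
    "the cat sat on the mat",
    "the cat ate the fish",
    "the dog sat on the rug",
]
model = train_bigram_model(corpus)
print(predict_next_word(model, "sat"))  # on
print(predict_next_word(model, "the"))  # cat
```

In this corpus, "the" is followed by "cat" two times out of six, so the model assigns "cat" a probability of 1/3 and predicts it as the next word. This purely count-based view is exactly what neural models go beyond.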

Neural language models can be further divided into several categories, one of which is transformer-based models, or simply transformers. These models are very good at understanding human language because they do not process text strictly word by word. Instead, they process all the words in a sentence in parallel, which makes them fast. Using a mechanism called attention, they relate each word to every other word in the sentence, which helps them understand context and expressions. They are best used for machine translation, understanding queries, and generating responses.
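The mechanism that lets transformers relate every word to every other word is self-attention. The pure-Python sketch below is heavily simplified and assumes tiny, hand-made 2-dimensional word vectors. It uses the input vectors directly as queries, keys, and values. A real transformer learns separate projections for these and stacks many attention heads and layers.

```python
import math


def softmax(scores):
    """Convert raw scores into positive weights that sum to 1."""
    m = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def self_attention(vectors):
    """Simplified scaled dot-product self-attention.

    Each position's scores against all positions depend only on the inputs,
    so the rows can be computed independently (in parallel in real systems).
    """
    d = len(vectors[0])
    outputs, all_weights = [], []
    for query in vectors:
        scores = [dot(query, key) / math.sqrt(d) for key in vectors]
        weights = softmax(scores)
        # Each output is a weighted average of all the value vectors
        out = [sum(w * v[i] for w, v in zip(weights, vectors)) for i in range(d)]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights


# Hypothetical 2-dimensional embeddings for a three-word sentence
sentence = {"bank": [1.0, 0.0], "river": [0.9, 0.1], "money": [0.0, 1.0]}
outputs, weights = self_attention(list(sentence.values()))
for word, row in zip(sentence, weights):
    print(word, [round(x, 2) for x in row])
```

Because "bank" and "river" have similar vectors here, "bank" places more attention weight on "river" than on "money". This is the kind of contextual relationship the paragraph above describes.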

What Is a Large Language Model?

LLMs fall under the category of transformer-based neural language models. An LLM is a type of AI model that is trained on a large data set so that it can understand human language (natural language processing) and generate responses to queries (question answering). Nowadays, people use large language models not only for research but also for their daily tasks. The best-known example of an LLM-based application is ChatGPT (Chat Generative Pre-trained Transformer).

How Does a Large Language Model Work?

Data from sources such as books, articles, and research papers is collected into a large data set. This data is then cleaned and preprocessed. The LLM is trained on the resulting data and learns the patterns of human language by observing grammar, meaning, and more.
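The exact preprocessing varies between models. Purely as an illustration, a minimal cleaning pass might collapse whitespace, drop fragments that are too short to be useful, and remove exact duplicates. The function name and the `min_words` threshold below are hypothetical.

```python
import re


def clean_corpus(documents, min_words=5):
    """Hypothetical cleaning pass: normalize whitespace, filter short texts, deduplicate."""
    seen = set()
    cleaned = []
    for doc in documents:
        text = re.sub(r"\s+", " ", doc).strip()  # collapse runs of spaces/newlines
        if len(text.split()) < min_words:
            continue  # too short to be useful training text
        key = text.lower()
        if key in seen:
            continue  # exact duplicate, ignoring letter case
        seen.add(key)
        cleaned.append(text)
    return cleaned


raw = [
    "Large language   models learn\nfrom text data.",
    "large language models learn from text data.",
    "Too short.",
    "Books, articles, and research papers are common sources.",
]
print(clean_corpus(raw))
```

Here the second document is dropped as a duplicate of the first and "Too short." is filtered out, leaving two cleaned documents.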

Once trained, the model can be deployed as a service that processes user queries and generates appropriate responses. These user interactions generate more data, which can be used to train the model further and improve it.

LLM Security

LLMs work differently from other products or services and therefore face different security challenges. Let's discuss some of the security risks large language models face:

- Prompt injection. This is also referred to as command injection, prompt hacking, or input manipulation. Because LLMs are trained on large data sets that may contain sensitive information, attackers can craft manipulated inputs to extract that information or gain unauthorized access.

- Data corruption. Because LLMs learn from their training data sets, an attacker who corrupts (or "poisons") the data in these sets can cause the model to generate inaccurate responses to user inputs.

- Service outage. An attacker can make the service unavailable or slow for other users by submitting inputs so complex that they consume most of the model's resources. This results in a poor experience for legitimate users. This is a form of denial-of-service (DoS) attack, because the model can no longer serve its actual users.

- Vulnerable plugin structure. Many LLM applications use third-party plugins, which can expose them to risks such as data breaches or malicious code being executed remotely through these plugins.

- Intellectual property theft. When someone gains unauthorized access to the proprietary information of an LLM, they can steal its data sets as well as user inputs and algorithms. They can then use these to create their own model, which in turn could be used to gain access to other sensitive information.
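Tying every request to an identity also helps against the service outage risk, because each user's share of the model's capacity can be limited. The sketch below shows a per-user token bucket under stated assumptions. The class name, capacity, and refill rate are hypothetical. In practice, such a limit could instead be enforced at an API gateway.

```python
import time


class PerUserRateLimiter:
    """Hypothetical token-bucket limiter keyed by authenticated user ID."""

    def __init__(self, capacity=5, refill_per_second=1.0, clock=time.monotonic):
        self.capacity = capacity
        self.refill_per_second = refill_per_second
        self.clock = clock
        self.buckets = {}  # user_id -> (tokens_left, last_timestamp)

    def allow(self, user_id, cost=1.0):
        """Return True if this user may send a request costing `cost` tokens."""
        now = self.clock()
        tokens, last = self.buckets.get(user_id, (self.capacity, now))
        # Refill tokens for the time elapsed, without exceeding the capacity
        tokens = min(self.capacity, tokens + (now - last) * self.refill_per_second)
        if tokens >= cost:
            self.buckets[user_id] = (tokens - cost, now)
            return True
        self.buckets[user_id] = (tokens, now)
        return False


# Usage with a fake clock so the behaviour is deterministic
fake_time = [0.0]
limiter = PerUserRateLimiter(capacity=3, refill_per_second=1.0, clock=lambda: fake_time[0])
print([limiter.allow("alice") for _ in range(4)])  # [True, True, True, False]
print(limiter.allow("bob"))                         # True (separate bucket)
fake_time[0] = 2.0
print(limiter.allow("alice"))                       # True (tokens refilled)
```

The `cost` parameter is an assumption. Charging more tokens for larger or more complex prompts would stop one user from monopolizing the model with expensive inputs, while other users keep their own budgets.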

These are a few of the key security risks large language models face. Now, let’s discuss how IAM can be used to mitigate these risks.

The Role of Identity and Access Management in Securing LLMs

Imagine your family has a lot of secret recipes written down in a diary. You only want your family members or close friends to access this diary, so you put a lock on it and make a unique key for each person. Now your recipes are safe, and only trusted people with their unique keys can open the diary.

Identity and access management serves the same purpose for your digital data. It assigns an identity to each trusted user and allows access only to those users. This keeps the data secure, just like your secret recipe diary.

Let’s take a closer look at the two parts of identity and access management in the context of large language models:

- Identity management. This helps the system establish a person's identity by creating an account, assigning a unique username and password, and adding other personal data so that the person can be tracked throughout the system. It can also delete accounts when needed.

- Access management. Every system has multiple types of users, and not all users have access to all resources. Access management verifies a user's identity and checks whether that user has permission to access the resource they are requesting. This ensures that only authorized users can access sensitive information and perform specific actions.
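Both parts can be illustrated with a minimal in-memory sketch. It assumes hypothetical names (`IdentityStore` and permissions such as `query_model`). It uses the standard library's PBKDF2 (`hashlib.pbkdf2_hmac`) so that only salted password hashes are stored. This is not a production design. A real system would typically add multi-factor authentication, issue session tokens rather than re-checking passwords on every call, and persist accounts in a database.

```python
import hashlib
import hmac
import secrets


class IdentityStore:
    """Hypothetical in-memory identity store for an LLM service (illustration only)."""

    ITERATIONS = 100_000  # PBKDF2 work factor; illustrative value, follow current guidance

    def __init__(self):
        self.users = {}  # username -> {"salt", "hash", "permissions"}

    def _hash(self, password, salt):
        return hashlib.pbkdf2_hmac("sha256", password.encode(), salt, self.ITERATIONS)

    def create_user(self, username, password, permissions=()):
        """Identity management: create an account with a unique username."""
        if username in self.users:
            raise ValueError(f"user {username!r} already exists")
        salt = secrets.token_bytes(16)  # random per-user salt
        self.users[username] = {
            "salt": salt,
            "hash": self._hash(password, salt),  # the plain password is never stored
            "permissions": set(permissions),
        }

    def delete_user(self, username):
        """Identity management: remove an account when it is no longer needed."""
        self.users.pop(username, None)

    def authenticate(self, username, password):
        """Access management, step 1: verify that the user is who they claim to be."""
        user = self.users.get(username)
        if user is None:
            return False
        candidate = self._hash(password, user["salt"])
        return hmac.compare_digest(candidate, user["hash"])  # constant-time comparison

    def is_allowed(self, username, password, permission):
        """Access management, step 2: check that the verified user holds the permission."""
        return (
            self.authenticate(username, password)
            and permission in self.users[username]["permissions"]
        )


store = IdentityStore()
store.create_user("alice", "correct horse battery staple", {"query_model"})
print(store.is_allowed("alice", "correct horse battery staple", "query_model"))         # True
print(store.is_allowed("alice", "wrong password", "query_model"))                       # False
print(store.is_allowed("alice", "correct horse battery staple", "read_training_data"))  # False
store.delete_user("alice")
print(store.authenticate("alice", "correct horse battery staple"))                      # False
```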

Securing LLMs

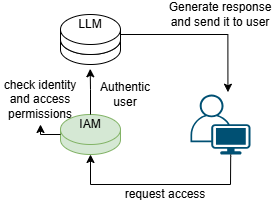

We discussed the various security risks that large language models face. When IAM is part of an LLM's security strategy, it helps minimize these risks in the following ways:

- Identity verification. When a user requests access to the LLM, IAM checks whether the user is who they claim to be. It can use several methods for this, such as biometric checks or multi-factor authentication (MFA).

- Access control. When a user tries to access a resource or perform an action, IAM checks whether that user has permission to do so. IAM also assigns permissions to users based on their roles. For example, an admin will have more access than a regular user or a guest.

- Monitoring and detection. IAM records which resources each user accesses and when. It then analyzes these records to uncover and report any suspicious activity.
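Role-based access control and monitoring can be combined in a small sketch. The gateway below maps roles to permissions (admins get more than users or guests), records every decision in an audit log, and flags users with repeated denied requests. The role names, permissions, and simple denial-count rule are all hypothetical. Real IAM systems use much richer policies and analytics.

```python
from collections import Counter
from datetime import datetime, timezone

# Hypothetical role -> permissions mapping: admins get more access than users or guests
ROLE_PERMISSIONS = {
    "guest": {"query_model"},
    "user": {"query_model", "view_history"},
    "admin": {"query_model", "view_history", "update_model", "manage_users"},
}


class AccessGateway:
    """Illustrative IAM gateway: role-based checks plus an audit log."""

    def __init__(self, user_roles, denial_threshold=3):
        self.user_roles = user_roles  # user_id -> role name
        self.denial_threshold = denial_threshold
        self.audit_log = []  # every access decision is recorded here

    def request(self, user_id, action):
        """Allow the action only if the user's role grants it, and log the decision."""
        role = self.user_roles.get(user_id)  # unknown users get no role
        allowed = action in ROLE_PERMISSIONS.get(role, set())
        self.audit_log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "user": user_id,
            "action": action,
            "allowed": allowed,
        })
        return allowed

    def suspicious_users(self):
        """Flag users whose number of denied requests reaches the threshold."""
        denials = Counter(e["user"] for e in self.audit_log if not e["allowed"])
        return sorted(u for u, n in denials.items() if n >= self.denial_threshold)


gateway = AccessGateway({"alice": "admin", "bob": "user", "eve": "guest"})
print(gateway.request("alice", "update_model"))  # True
print(gateway.request("bob", "update_model"))    # False
for _ in range(3):
    gateway.request("eve", "manage_users")       # repeatedly denied
print(gateway.suspicious_users())                # ['eve']
```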

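Figure: Simple working of the IAM framework with an LLM

Challenges of Using IAM With LLMs

Although IAM strengthens LLM security, implementing it also comes with challenges: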

- Deployment challenges. Deploying IAM in a system is not always easy. This is especially true for systems with limited spare resources, because IAM can be resource-intensive and time-consuming to implement.

- User experience. User experience is vital for any user-facing product. IAM adds extra steps to identify the user, which some users may find frustrating.

- Cost. IAM can be costly because it requires additional technology (software or hardware) and training. It also needs ongoing maintenance to remain effective, which can be time-consuming.

- Technical challenges. Users may need some time to get used to the new identification process. Integrating IAM with an existing system can also be tricky.

Identity and access management can serve as a guard around large language models, ensuring that only authorized people can access and use them. IAM, together with other security strategies, is essential for the safe and responsible use of AI technologies. When implemented successfully, these strategies make it possible to use innovative LLMs both safely and effectively.

Opinions expressed by DZone contributors are their own.
