Streaming Data Using Node.js

Learn how streaming can be useful to perform certain tasks using different programming languages. We will also see how it can be used in Node.js.

Streaming is not a new concept. It has long been a common requirement in programming; however, it is under-appreciated and not discussed as often as it should be. I was watching the movie "Source Code" (2011) when it suddenly clicked and I was able to connect the dots. Although the movie is about a time-stream that is replayed to undo an accident in the past, it's not much different from the topic of this post.

I will try to keep the details simple, and hopefully, after reading this post, you will have a better understanding of streaming. Once you get the hang of streams, you will see them used in many places and will be able to harness their power in your projects.

I am also assuming you have basic knowledge of Node.js; if you want, you can read my other articles on that topic. Let's start.

Input/Output (I/O)

- In computer science, Input/Output or I/O refers to the transfer of data from or to applications.

- There are various ways an application can perform I/O functions and one of those is Streams.

- Stream I/O: Data is represented as a stream of bytes, where a stream can represent different kinds of sources and/or destinations (e.g. files, network locations, memory arrays, etc.).

- Streaming is not specific to Node. It is a common concern among various programming environments. Let's talk about it in a general fashion and later we will see how Node addresses it.

Streaming Example

Before we continue with the theory and details behind streams, let's see a very simple example:

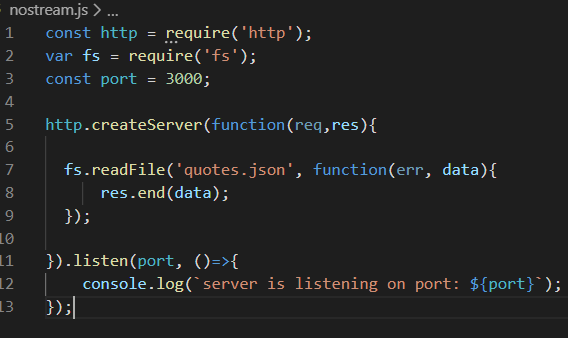

Scenario: You want to send a file from a web server to a client (browser) in an efficient manner that will scale up to large files.

Here we are using the readFile function, which is non-blocking but still reads the entire file into memory before sending it. This part can easily be improved by using streams. See the example below:

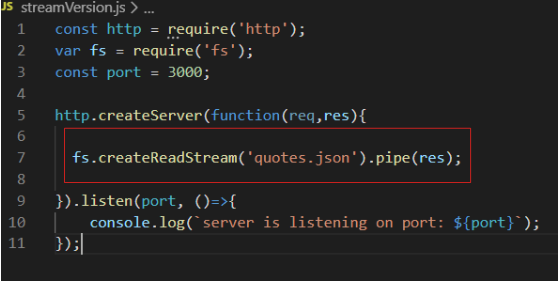

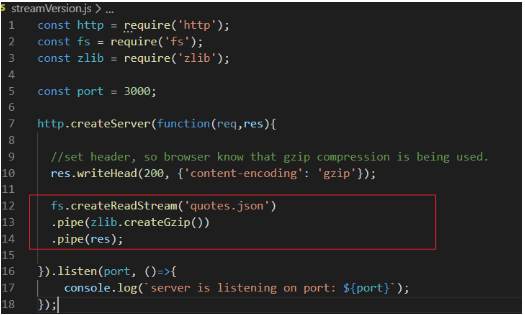
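For readers who cannot view the screenshots, here is a minimal sketch of both approaches side by side. It is not the original listing: the function names (sendBuffered, sendStreamed, createFileServer), the file name, and the port are placeholders chosen for illustration.

```javascript
const fs = require('node:fs');
const http = require('node:http');

// Buffered: readFile is non-blocking, but the whole file sits in memory
// before the first byte is sent.
function sendBuffered(filePath, res) {
  fs.readFile(filePath, (err, data) => {
    if (err) {
      res.statusCode = 500;
      res.end(String(err));
      return;
    }
    res.end(data);
  });
}

// Streamed: the file is read and written one buffer-sized chunk at a time.
// pipe() also pauses reading while the destination is busy.
function sendStreamed(filePath, res) {
  const source = fs.createReadStream(filePath);
  source.on('error', (err) => {
    res.statusCode = 500;
    res.end(String(err));
  });
  source.pipe(res);
}

// Wraps either sender in an HTTP server. Nothing listens until listen() is called.
function createFileServer(filePath, send = sendStreamed) {
  return http.createServer((req, res) => send(filePath, res));
}

// Usage, with a hypothetical file and port:
// createFileServer('./large-file.txt').listen(8000);
```

Both senders produce the same response body; the difference is only in how much of the file is held in memory at any moment.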

This example is more efficient. Instead of reading the entire file into memory, a buffer's worth is read at a time and sent to the client. If the client is on a slow connection, the network stream signals this by requesting that the I/O source pause until the client is ready for more data. This is known as back-pressure. We can take this example one step further: because streams compose, adding compression takes only one more step in the chain.

This can be extended in many ways, e.g. the file can first be piped through an HTML template engine and then compressed. Just remember that the general pattern is readable.pipe(writable).
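To make the compression step concrete, here is a minimal sketch using Node's built-in zlib module. The names pipeGzipped and createGzipServer are placeholders, and the server is deliberately simplified: a real one would check the request's Accept-Encoding header before compressing.

```javascript
const fs = require('node:fs');
const http = require('node:http');
const zlib = require('node:zlib');

// readable.pipe(transform).pipe(writable): the gzip stream sits in the middle
// and compresses each chunk as it passes through.
function pipeGzipped(filePath, destination) {
  return fs.createReadStream(filePath)
    .pipe(zlib.createGzip())
    .pipe(destination);
}

// Simplified server: always compresses (assumption: the client accepts gzip).
function createGzipServer(filePath) {
  return http.createServer((req, res) => {
    res.setHeader('Content-Encoding', 'gzip');
    pipeGzipped(filePath, res);
  });
}

// Usage, with a hypothetical file and port:
// createGzipServer('./large-file.txt').listen(8000);
```

Note that pipe() does not forward errors between the stages; stream.pipeline is the usual choice when error handling and cleanup matter.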

Data Streaming

- Let's take a step back and understand what a stream is. This part is not specific to Node.js; you can apply it generically, and the concepts presented here are more or less the same in .NET, Java, or any other programming language.

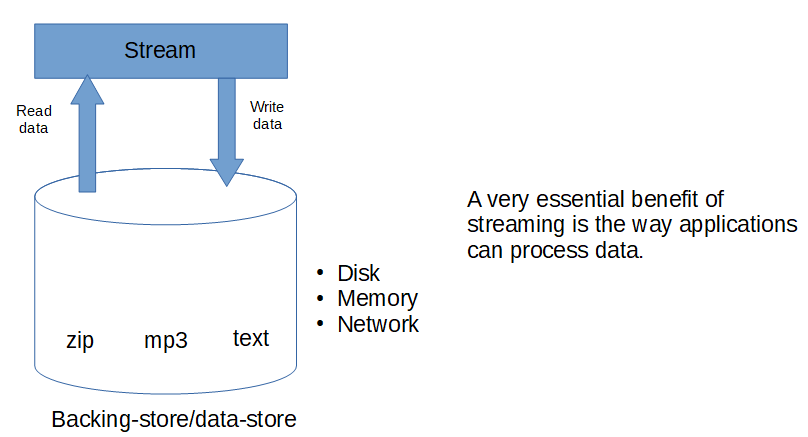

- A stream is a sequence of bytes that you can use to read from or write to a storage medium (aka backing store).

- This medium can be the file system on a disk, it can be memory, or it can be a network socket. So it's a storage mechanism holding some data. The nature of this data does not matter. It can be a zip file, an audio file, a text file, or any other type of data.

- A stream then models this data, regardless of its type, as a sequence of bytes and gives the application the ability to read from or write to these bytes.

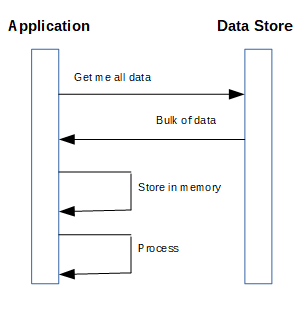

Non-Streaming Data Consumption

Let's see data consumption flow in a non-streaming fashion:

- Without a streaming approach, an application gets the data from a data source.

- Its only option is to get all of the data at once.

- The application will have to store this data in memory before being able to process it. This of course can have a huge negative impact on memory as the size of data grows. This would severely harm the scalability of your applications.

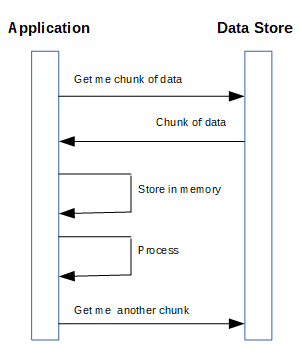

Stream-Based Data Consumption

Now let's see what stream-based data consumption brings to the table:

- A very important benefit of this approach is that applications can consume data in chunks. What the application gets back is a portion of the data, a single chunk.

- The size of this chunk is typically decided based on memory capacity and expected load.

- The important thing is that the application is not required to load the entire data into memory. Instead, only that chunk is stored in memory and then processed by the application.

- The application then asks for the next chunk whenever it's ready to continue processing. This continues until there are no remaining data chunks.
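The flow described above can be sketched in Node.js as follows. This is an illustration under assumptions: consumeInChunks is a made-up helper name, the 64 KiB default chunk size is arbitrary, and the "processing" is just counting bytes.

```javascript
const fs = require('node:fs');

// Reads a file chunk by chunk. Only one chunk (at most `chunkSize` bytes)
// is handed to the loop body at a time; the next chunk is requested only
// after the current iteration finishes.
async function consumeInChunks(filePath, chunkSize = 64 * 1024) {
  const stream = fs.createReadStream(filePath, { highWaterMark: chunkSize });
  let chunks = 0;
  let bytes = 0;
  let largest = 0;
  for await (const chunk of stream) {
    // Real processing of `chunk` would go here.
    chunks += 1;
    bytes += chunk.length;
    largest = Math.max(largest, chunk.length);
  }
  return { chunks, bytes, largest };
}

// Usage, with a hypothetical file:
// consumeInChunks('./large-file.txt').then(console.log);
```

The highWaterMark option is how the chunk size is tuned here; a smaller value lowers peak memory use at the cost of more read operations.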

What About Memory-Based Streams?

- There are also memory-based streams, where the data comes from memory. So the question is: if the data is already in memory, how do you take advantage of the memory-saving characteristics of a stream? Well, in this case, the answer is simply that you don't.

- Memory-based streams already have their data in memory, so there is no advantage here. This, however, does not mean memory-based streams are not used. There are still various usage scenarios, some of which we will see in later posts.
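As one possible illustration (my assumption, since the post does not name a scenario here), a memory-based stream lets data that is already in memory be fed to code that expects a stream, which is handy in tests. The helper name streamFromBuffer and the tiny chunk size are placeholders.

```javascript
const { Readable } = require('node:stream');

// Exposes an in-memory Buffer as a readable stream of fixed-size slices.
// No memory is saved (the whole buffer already exists); the benefit is that
// the data now fits any API that consumes streams.
function streamFromBuffer(buffer, chunkSize = 4) {
  function* slices() {
    for (let offset = 0; offset < buffer.length; offset += chunkSize) {
      yield buffer.subarray(offset, offset + chunkSize);
    }
  }
  return Readable.from(slices());
}

// Usage: pipe in-memory data wherever a readable stream is expected.
// streamFromBuffer(Buffer.from('hello memory stream')).pipe(process.stdout);
```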

Streams in Node.js

OK, now that we have a general understanding of streams and their benefits, let's look at the structure and usage of streams in Node.js.

- In Node, streams are an abstract interface adhered to by several different objects. Streams can be readable or writable, and all streams are instances of EventEmitter. Streams provide the means of creating data flows between objects.

- EventEmitter is the basis for most of Node's core modules. The streams, networking, and file system modules derive from it. You can inherit from EventEmitter to make your own event-based API.

- Streams, which inherit from EventEmitter, can be used to model data with unpredictable throughput, like a network connection where data speeds can vary depending on what other users on the network are doing.

- Streams can be piped together, so you could have one stream class that reads data from the network and pipe it to a stream that transforms the data into something else. This could be data from an XML API that's transformed into JSON, making it easier to work with in JavaScript.

- You might think that events and streams sound abstract, and though that’s true, it is also interesting to note that they’re used as the basis for I/O modules like fs and net.

Types of Streams

- Built-in: e.g. fs.createReadStream

- HTTP: Technically network streams, used to work with various web technologies.

- RPC (Remote procedure call): Sending streams over the network is a useful way to implement inter-process communication.

- Protocols implemented with streams can be convenient when composition is desired. Think about how easy it would be to add data compression to a network protocol if data could be passed through the gzip module with a single call to pipe.

- Similarly, database libraries that stream data can handle large result sets more efficiently; rather than collecting all results into an array, a single item at a time can be streamed.

- You can also find creative uses of streams online, e.g. the baudio module, which is used to generate audio streams.
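To tie the transform and database points together, here is a minimal sketch of rows being streamed one item at a time through a transform. Everything in it is hypothetical: fetchRows stands in for a database cursor, and toJsonLines and exportRows are names invented for this example.

```javascript
const { Readable, Transform } = require('node:stream');
const { pipeline } = require('node:stream/promises');

// Hypothetical row source standing in for a database cursor:
// rows are produced one at a time instead of as one big array.
async function* fetchRows() {
  yield { id: 1, name: 'Ada' };
  yield { id: 2, name: 'Linus' };
}

// Transform stream: takes one row object in, emits one line of JSON out.
function toJsonLines() {
  return new Transform({
    writableObjectMode: true,
    transform(row, _encoding, callback) {
      callback(null, JSON.stringify(row) + '\n');
    },
  });
}

// Connects source, transform, and destination; pipeline() forwards errors
// and cleans up all stages if any one of them fails.
async function exportRows(destination) {
  await pipeline(Readable.from(fetchRows()), toJsonLines(), destination);
}

// Usage, with a hypothetical output file:
// exportRows(require('node:fs').createWriteStream('./rows.ndjson'));
```

Swapping the transform for a different one (or adding a gzip stage after it) changes the output format without touching the source or the destination.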

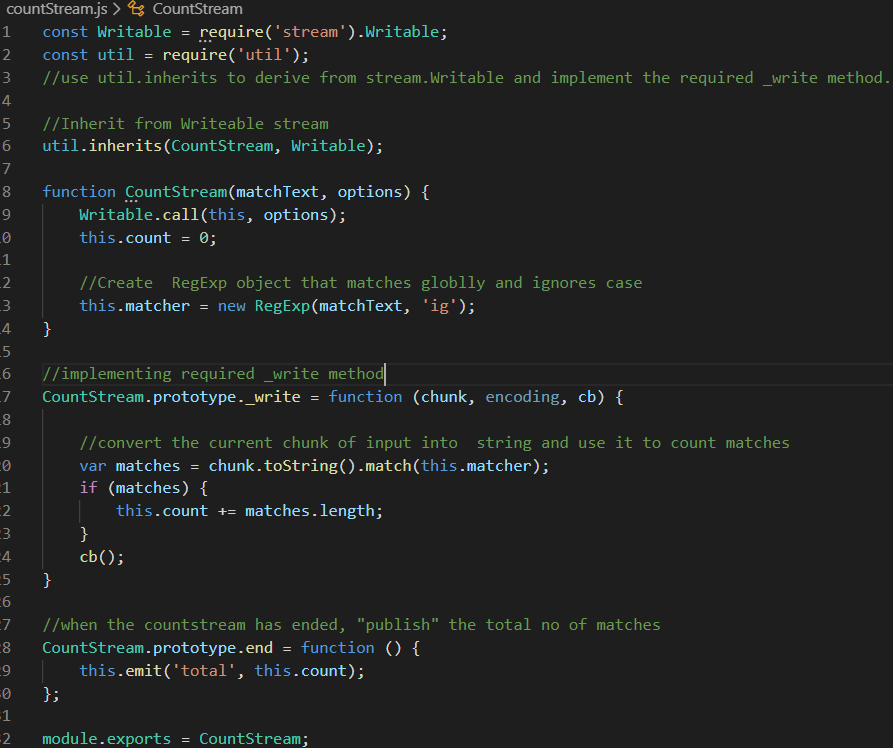

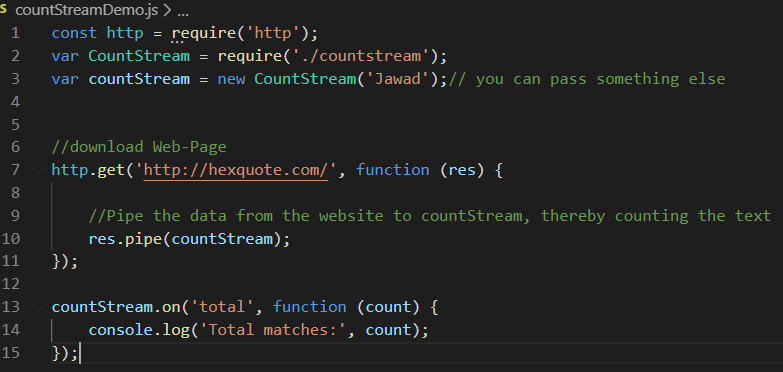

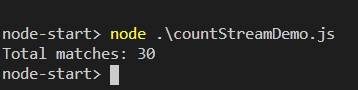

Stream Example: Word-Count

Let's see another example of streams. The code is self-explanatory; if something is not clear, please ask in the comments. The example downloads a web page and counts the occurrences of a specific word.

- The significant thing here is the res.pipe method.

- When you pipe data, it doesn’t matter how big it is or if the network is slow. The CountStream class will dutifully count matches until the data has been processed.

- This Node program does not download the entire file first! It takes the file piece by piece and processes it. That's the big thing here and a critical aspect of Node development.
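The original listing is not reproduced in this text, so the following is only a sketch of what a CountStream like the one described could look like. The implementation details (case-insensitive substring matching, the carry-over between chunks, reading the result from a count property on 'finish') and the countWordAt helper are assumptions made for illustration.

```javascript
const https = require('node:https');
const { Writable } = require('node:stream');
const { StringDecoder } = require('node:string_decoder');

// Writable stream that counts case-insensitive occurrences of `word`
// in whatever text is piped into it.
class CountStream extends Writable {
  constructor(word, options) {
    super(options);
    if (!word) throw new Error('word must be a non-empty string');
    this.word = word.toLowerCase();
    this.count = 0;
    this.carry = ''; // tail of the previous chunk, for matches split across chunks
    this.decoder = new StringDecoder('utf8'); // handles split multi-byte characters
  }

  _write(chunk, _encoding, callback) {
    const text = this.carry + this.decoder.write(chunk).toLowerCase();
    let index = text.indexOf(this.word);
    while (index !== -1) {
      this.count += 1;
      index = text.indexOf(this.word, index + this.word.length);
    }
    // Keep fewer characters than the word has, so a full match is never recounted.
    this.carry = this.word.length > 1 ? text.slice(-(this.word.length - 1)) : '';
    callback();
  }
}

// Pipes an HTTPS response straight into the counter (error handling omitted).
function countWordAt(url, word, onDone) {
  const counter = new CountStream(word);
  counter.on('finish', () => onDone(counter.count));
  https.get(url, (res) => res.pipe(counter));
}

// Usage, with a hypothetical URL:
// countWordAt('https://example.com/', 'example', (n) => console.log(n));
```

At no point does the counter hold more than one chunk plus a few carried-over characters, which is what keeps memory use flat regardless of page size.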

Summary

Streams are one of the underrated but powerful constructs of many programming languages, and almost all languages support them. Although I used Node.js for the demos, you can apply the same concepts to other languages. I will write another post to demo this in .NET and Java. Till next time, happy coding.

Published at DZone with permission of Jawad Hasan Shani. See the original article here.
