What Is ELT?

Learn about ELT (Extract, Load, Transform), a modern data management approach that loads raw data into cloud systems for scalable, real-time transformation and analytics.

ELT (“Extract, Load, Transform”) represents a paradigm shift in data management, emphasizing the power and scalability of modern data processing. By prioritizing the loading of raw data into powerful data processing systems before transformation, ELT enables organizations to harness their data more efficiently and with greater agility.

The Role of ELT in Modern Data Architecture

ELT plays a pivotal role in contemporary data strategies, aligning with a cloud-first approach and the need for real-time analytics. It supports the vast data volumes generated by today's businesses, providing a flexible framework that adapts to an ever-changing data landscape:

- Adaptability to data volume and variety: ELT thrives in data-centric environments, allowing for the storage and management of vast quantities of data and accommodating the rapid growth and diversity of enterprise data sources.

- Real-time data processing: By enabling on-the-fly data transformation within powerful cloud data warehouses, ELT facilitates real-time analytics, which is crucial for immediate business insights and actions.

- Cloud-native integration: Seamlessly integrating with cloud services, ELT simplifies data pipelines, fostering a cohesive data ecosystem that enhances analytics and machine learning capabilities.

- Cost-effective scalability: ELT's cloud-native nature offers scalable solutions that align with pay-as-you-go pricing models, reducing overhead and enabling cost savings on infrastructure.

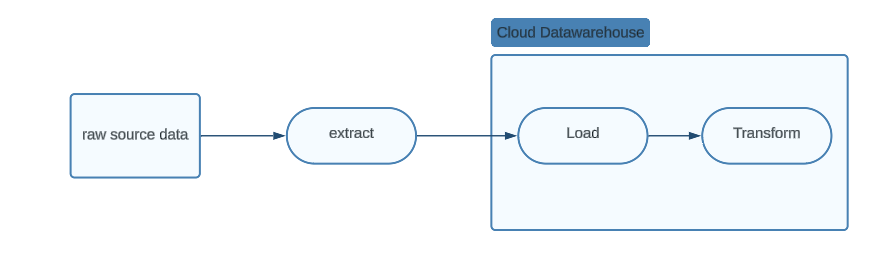

How Does ELT Work?

ELT reimagines the traditional data processing workflow by changing the order and environment in which data is transformed.

- Extract: Data is sourced from a multitude of origin points, from on-premises databases to cloud-based applications, ready to be ingested into a centralized processing system.

- Load: The raw data is loaded directly into a data processing engine, often a cloud-based data warehouse or data lake, where it is stored in its native format.

- Transform: Transformations are performed as needed, utilizing the powerful compute resources of the target system, allowing for complex analytics and manipulation at scale.
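The three steps above can be sketched in a few lines of Python. This is a minimal illustration rather than a production pipeline: it assumes an in-memory SQLite database as a stand-in for a cloud data warehouse, and the records, table names, and function names are all hypothetical.

```python
import sqlite3

# Hypothetical source records; a real pipeline would read from a database or API.
SOURCE_RECORDS = [
    {"order_id": 1, "customer": "acme", "amount": "19.90"},
    {"order_id": 2, "customer": "acme", "amount": "5.10"},
    {"order_id": 3, "customer": "globex", "amount": "42.00"},
]


def extract():
    """Extract: pull records from the source without reshaping them."""
    return list(SOURCE_RECORDS)


def load(conn, records):
    """Load: store the records as-is (all TEXT columns), with no cleaning."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS raw_orders "
        "(order_id TEXT, customer TEXT, amount TEXT)"
    )
    conn.executemany(
        "INSERT INTO raw_orders VALUES (:order_id, :customer, :amount)",
        records,
    )


def transform(conn):
    """Transform: derive an analytics table with SQL run by the target system."""
    conn.execute("DROP TABLE IF EXISTS customer_revenue")
    conn.execute(
        """
        CREATE TABLE customer_revenue AS
        SELECT customer, SUM(CAST(amount AS REAL)) AS revenue
        FROM raw_orders
        GROUP BY customer
        """
    )


def run_pipeline():
    """Run extract, load, and transform in ELT order and return the result rows."""
    conn = sqlite3.connect(":memory:")
    load(conn, extract())
    transform(conn)
    return conn.execute(
        "SELECT customer, revenue FROM customer_revenue ORDER BY customer"
    ).fetchall()


if __name__ == "__main__":
    for customer, revenue in run_pipeline():
        print(customer, round(revenue, 2))
```

Note that the type cast and aggregation happen only in `transform`, after the raw rows are already stored. The raw table stays available, so a different transformation can be run later without extracting again.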

The ELT Structure

The architecture of ELT is meticulously crafted to manage and process data at scale, providing the agility and robust performance that modern enterprises require. It's an architecture that aligns with the shift towards more dynamic, cloud-based data handling and analytics.

Overview of a Typical ELT Architecture

At its core, ELT architecture is distinguished by a simplified and efficient workflow that strategically reduces unnecessary data movement. By capitalizing on the advanced computational capabilities of modern data storage solutions, ELT streamlines the path from data source to insights. This efficiency is particularly beneficial in cloud environments, where the elasticity of resources can be harnessed to handle fluctuating data workloads with ease.

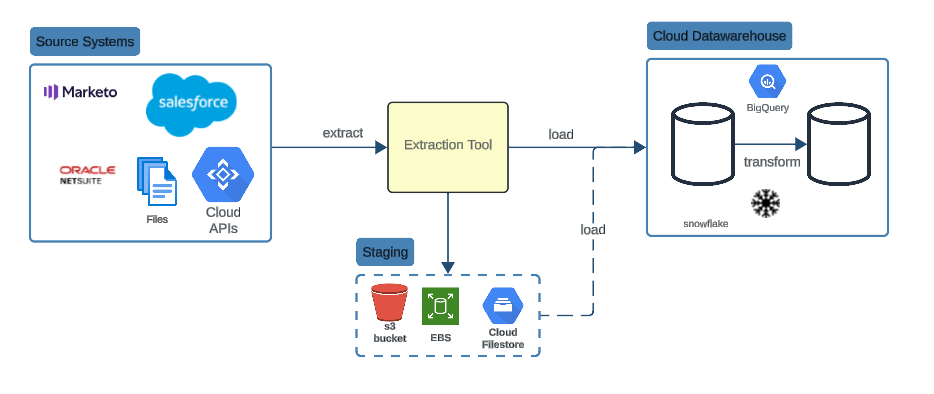

Components of a Typical ELT Architecture

- Source systems: The origin points for data, which can range from traditional databases to cloud applications.

- Staging area: An optional intermediary storage area where data can be held before loading, if necessary.

- Target systems: The central processing engines, such as cloud data warehouses, where data is loaded and transformed.

- Transformation logic: The set of rules and operations applied to data within the target system to prepare it for analysis.

ELT vs. ETL

While ELT and ETL share the same fundamental components, their approaches differ significantly in execution and capabilities. The evolution from ETL to ELT reflects a fundamental change in data processing, driven by the advent of cloud computing and the need for more agile data operations.

| Aspect | ELT | ETL |
|---|---|---|
| Transformation | Transformation occurs within the data warehouse, leveraging its native features such as massive parallel processing and optimized storage. | Transformation happens in a separate processing layer, often necessitating additional infrastructure and maintenance. |
| Architecture | The architecture of ELT is inherently simpler, with a focus on extracting and loading data before transforming it within the target system. This reduces the number of stages data must pass through, decreasing latency and potential points of failure. | ETL's architecture, traditionally more segmented, can lead to increased data movement and longer processing times. |
| Workflow | ELT workflows are characterized by their adaptability and integration with cloud services, providing a seamless path from raw data to actionable insights. | ETL workflows, historically linear and less flexible, may struggle to keep pace with the dynamic demands of real-time analytics and big data processing. |
| Benefits | ELT offers a streamlined approach that aligns with the scalability of cloud storage and modern data warehouses like Redshift and Snowflake. It allows for greater flexibility in data modeling and empowers analysts with SQL skills to participate in the data transformation process. | ETL provides precise control over data transformations before the data reaches the warehouse, ensuring data quality and consistency. |
| Challenges | Raw data is loaded into the warehouse before transformation, which can expose sensitive information if not properly managed. While ELT simplifies the architecture, complex transformations can still be challenging and may require advanced SQL skills. | While providing precise control over data before it reaches the warehouse, ETL can require significant engineering resources to manage custom scripts and complex transformations. |

In summary, ELT has become the preferred method for many organizations due to its alignment with current technology trends, cost efficiency, and data democratization. It allows for a more collaborative and iterative approach to data transformation, enabling businesses to respond swiftly to new data and insights.

Benefits of ELT

ELT transforms the landscape of data management, offering a multitude of benefits that extend beyond data processing efficiencies to empower teams and enhance business operations.

Code-Centric Data Transformation

Adopting ELT means embracing a code-centric approach to data transformation, which brings several distinct advantages:

- Adherence to software principles: Data teams can manage transformations with the same rigor as software development, using version control to track changes and maintain the integrity of data models.

- Automated workflows: Incorporating automated workflows such as CI/CD into the data transformation process enhances the consistency and speed of deploying data models.
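As a rough illustration of what "transformations as code" can look like, the sketch below keeps a transformation as a SQL string in a source file and pairs it with a test that a CI job could run on every change. It assumes SQLite as a stand-in warehouse; the table names, column names, and function names are hypothetical, and dedicated tools such as dbt provide their own mechanisms for this.

```python
import sqlite3

# The transformation lives in a source file, so it can be reviewed,
# versioned, and tested like any other code. Names are hypothetical.
DEDUPE_CUSTOMERS_SQL = """
CREATE TABLE customers_clean AS
SELECT email, MAX(updated_at) AS updated_at
FROM raw_customers
GROUP BY email
"""


def apply_transformation(conn):
    """Rebuild the cleaned table from the raw table."""
    conn.execute("DROP TABLE IF EXISTS customers_clean")
    conn.execute(DEDUPE_CUSTOMERS_SQL)


def test_dedupe_keeps_latest_row():
    """A small fixture-based check that a CI pipeline could run before deploying."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE raw_customers (email TEXT, updated_at TEXT)")
    conn.executemany(
        "INSERT INTO raw_customers VALUES (?, ?)",
        [
            ("a@example.com", "2024-01-01"),
            ("a@example.com", "2024-03-01"),
            ("b@example.com", "2024-02-01"),
        ],
    )
    apply_transformation(conn)
    rows = conn.execute(
        "SELECT email, updated_at FROM customers_clean ORDER BY email"
    ).fetchall()
    assert rows == [
        ("a@example.com", "2024-03-01"),
        ("b@example.com", "2024-02-01"),
    ]


if __name__ == "__main__":
    test_dedupe_keeps_latest_row()
    print("transformation test passed")
```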

Empowerment Through Accessibility

ELT empowers team members across the data spectrum by making data more accessible and the transformation process more transparent:

- Independence for data teams: Analysts and data scientists gain the ability to manage their own data pipelines, reducing reliance on specialized data engineering roles.

- Collaborative transparency: By making the data transformation process more visible, business users can better understand and trust the data, leading to improved cross-functional collaboration.

Scalable Data Handling

ELT scales with your organization: because transformations run on the compute resources of the target system, the same pipeline can handle expanding data volumes and more complex processing demands.

Reduced Operational Costs

ELT minimizes the need for complex, intermediary data processing stages, resulting in a leaner, more cost-effective data pipeline.

By integrating these benefits, ELT not only streamlines the technical aspects of data management but also fosters a culture of innovation and collaboration, positioning organizations to fully capitalize on their data assets.

Top Challenges and Considerations

While ELT offers numerous advantages, its implementation is not without challenges. Organizations must carefully address these hurdles to ensure a successful ELT strategy:

- Data quality assurance: Ensuring the accuracy and integrity of data within the ELT process is paramount. Common data quality issues include inconsistencies, duplicates, and incomplete data, which can lead to unreliable analytics and business decisions.

- Integration complexity: As data landscapes become increasingly complex with a variety of sources and formats, integrating these disparate data streams into a cohesive ELT process can be challenging. This complexity requires sophisticated mapping and transformation logic to ensure seamless data flow.

- Security and compliance: Protecting sensitive data and adhering to regulatory standards like GDPR and CCPA is a critical concern in ELT processes. The challenge lies in implementing robust security measures that safeguard data throughout the entire pipeline.

- Performance optimization: Maintaining high performance in the face of large data volumes and complex transformations is a significant challenge. Organizations must optimize their ELT processes to prevent bottlenecks and ensure timely data availability.

Solutions to Overcome ELT Challenges

To address the above ELT challenges, organizations can adopt a series of best practices and solutions:

- Robust data governance: Implementing a strong data governance framework ensures data quality and consistency. This includes establishing clear policies, roles, and responsibilities for data management.

- Comprehensive monitoring: Continuous monitoring of the ELT process allows for the early detection of issues, enabling prompt resolution. This includes tracking data flow, performance metrics, and error logs.

- Cloud-native services: Utilizing cloud-native services and tools can enhance the security and performance of ELT processes. These services often come with built-in scalability and compliance features.

- Performance tuning: Regularly reviewing and tuning the performance of the ELT pipeline helps maintain efficiency. This may involve optimizing queries, indexing strategies, and resource allocation.

By addressing these challenges with strategic solutions, organizations can fully leverage the power of ELT to drive data-driven decision-making and maintain a competitive edge in the market.
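The monitoring practice listed above (tracking data flow, performance metrics, and error logs) might be sketched as a small wrapper around each pipeline step. This is an assumption-laden illustration: `run_step` and the shape of the metrics records are hypothetical, and a real deployment would likely send these values to a monitoring service instead of a Python list.

```python
import logging
import time

logger = logging.getLogger("elt")


def run_step(name, func, metrics):
    """Run one pipeline step and record its status, duration, and row count.

    `func` is any callable that returns the number of rows it processed.
    """
    start = time.perf_counter()
    try:
        rows = func()
    except Exception:
        # Record the failure and log the traceback, then let the error propagate.
        metrics.append({
            "step": name,
            "status": "failed",
            "seconds": time.perf_counter() - start,
            "rows": None,
        })
        logger.exception("step %s failed", name)
        raise
    elapsed = time.perf_counter() - start
    metrics.append({"step": name, "status": "ok", "seconds": elapsed, "rows": rows})
    logger.info("step %s loaded %s rows in %.3fs", name, rows, elapsed)
    return rows


if __name__ == "__main__":
    logging.basicConfig(level=logging.INFO)
    collected = []
    run_step("load_orders", lambda: 3, collected)  # hypothetical step
    print(collected)
```

Comparing row counts and durations across runs is one simple way to notice a source that has stopped sending data or a transformation that has started to slow down.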

Use Cases and Applications

The versatility of ELT makes it a valuable asset across a wide range of scenarios, from streamlining data warehousing to powering sophisticated analytics.

Advanced Data Warehousing

ELT is instrumental in constructing next-generation data warehouses that are not only scalable but also capable of supporting complex queries and real-time analytics. This is particularly beneficial for businesses that require immediate insights from their data to inform strategic decisions.

Comprehensive Data Integration

With ELT, organizations can more easily consolidate data from a multitude of sources, including IoT devices, online transactions, and customer interactions, into a single, cohesive analytics platform. This integration is key to developing a 360-degree view of business operations and customer behavior.

Enhanced Analytics and Business Intelligence

ELT facilitates the execution of sophisticated analytical queries and the deployment of machine learning models, even on expansive datasets. Analysts and data scientists can iterate on data models more rapidly, accelerating the time from data to insight.

Data Democratization

By simplifying the data transformation process, ELT empowers a broader range of users within an organization to engage with data. This democratization of data fosters a culture of informed decision-making and encourages innovation across all levels of the business.

By leveraging ELT in these use cases, organizations can not only improve their data management and analytics capabilities but also gain a competitive advantage by being more responsive to market trends and customer needs.

Common ELT Tools and Technologies

The ELT ecosystem is rich with tools that cater to different aspects of the process. Here's a brief overview of some notable ELT tools:

- Fivetran: Fivetran is a cloud-based service that simplifies the extraction and loading of data into data warehouses. While primarily focused on the extract and load components of ELT, Fivetran also offers limited transformation capabilities. It integrates seamlessly with modern data warehouses and can leverage dbt Core for transformations, making it a comprehensive solution for data teams looking to streamline their ELT workflows.

- Stitch by Talend: Stitch, a part of Talend, is a SaaS product offering a multitude of connectors for extracting data from various sources and loading it into data warehouses. While it doesn't provide transformation capabilities, its strength lies in its ease of use and the breadth of its connector ecosystem, making it a solid choice for organizations focusing on the extract and load stages of ELT.

- Airbyte: Airbyte is an open-source ELT tool that also offers a cloud service, enabling teams to create custom data extraction and loading pipelines. Its open-source nature allows for greater flexibility and customization, appealing to teams with specific needs or those looking to integrate with unique data sources.

- dbt (data build tool): dbt stands out as a transformation-centric tool within the ELT process. It enables data analysts and engineers to perform transformations directly in the data warehouse, applying software engineering best practices like version control, testing, and documentation to the data modeling process. dbt is available as both an open-source and a cloud-based product, making it accessible to a wide range of users and use cases.

Each of these tools plays a specific role within the ELT pipeline, and organizations may choose to use one or a combination of them depending on their data requirements, technical expertise, and the complexity of their data workflows.

ELT Best Practices

To fully harness the potential of ELT and ensure a smooth data management process, organizations should adhere to a set of best practices. These practices not only enhance the efficiency and reliability of the ELT process but also help maintain compliance with data regulations.

Prioritize Data Quality

From the outset, establish rigorous data quality checks and validation processes. This ensures that the data loaded into the system is accurate and trustworthy, which is crucial for reliable analytics.
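One possible shape for such checks is a report that counts duplicate keys and missing values in a loaded table. The sketch below assumes SQLite as a stand-in for the warehouse, and the `quality_report` function and its arguments are hypothetical.

```python
import sqlite3


def quality_report(conn, table, key_column, required_columns):
    """Count duplicate keys and missing required values in a loaded table.

    Table and column names are interpolated into the SQL text, so they must
    come from trusted pipeline code, never from user input.
    """
    total = conn.execute(f"SELECT COUNT(*) FROM {table}").fetchone()[0]
    duplicate_keys = conn.execute(
        f"SELECT COUNT(*) FROM ("
        f"SELECT {key_column} FROM {table} "
        f"GROUP BY {key_column} HAVING COUNT(*) > 1)"
    ).fetchone()[0]
    missing = {}
    for col in required_columns:
        # Treat both NULL and blank strings as missing.
        missing[col] = conn.execute(
            f"SELECT COUNT(*) FROM {table} "
            f"WHERE {col} IS NULL OR TRIM({col}) = ''"
        ).fetchone()[0]
    return {"rows": total, "duplicate_keys": duplicate_keys, "missing": missing}


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE raw_customers (customer_id INTEGER, email TEXT)")
    conn.executemany(
        "INSERT INTO raw_customers VALUES (?, ?)",
        [(1, "a@example.com"), (1, "a@example.com"), (2, None), (3, "")],
    )
    print(quality_report(conn, "raw_customers", "customer_id", ["email"]))
```

A pipeline could fail the run, or quarantine the batch, when any of these counts exceeds an agreed threshold.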

Streamline Data Integration

Develop a well-organized approach to integrating data from various sources. This involves standardizing data formats, establishing clear naming conventions, and ensuring consistency across data sets.
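As a small example of a naming convention applied in code, the sketch below normalizes field names from different sources to snake_case before they are used as column names. The choice of snake_case and the helper names are assumptions made for illustration, not a requirement of ELT.

```python
import re


def to_snake_case(name):
    """Convert a source field name such as 'Customer ID' or 'orderDate' to snake_case."""
    name = name.strip()
    # Insert an underscore at camelCase boundaries (lowercase or digit, then uppercase).
    name = re.sub(r"(?<=[a-z0-9])(?=[A-Z])", "_", name)
    # Collapse any run of non-alphanumeric characters into a single underscore.
    name = re.sub(r"[^A-Za-z0-9]+", "_", name)
    return name.strip("_").lower()


def normalize_record(record):
    """Return a copy of the record with every key converted to snake_case."""
    return {to_snake_case(key): value for key, value in record.items()}


if __name__ == "__main__":
    print(normalize_record({"Customer ID": 7, "orderDate": "2024-01-01"}))
```

Two source names can map to the same normalized name (for example `Order-ID` and `order_id`), so a real pipeline would want to detect such collisions instead of silently overwriting a value.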

Secure Data at Every Stage

Implement robust security protocols, including encryption, access controls, and regular audits, to protect data throughout the ELT pipeline. This is vital for maintaining customer trust and meeting compliance standards.
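Because ELT loads raw data before transforming it, one option some teams consider is pseudonymizing sensitive fields before the load step. The sketch below uses a keyed hash (HMAC-SHA256) from the Python standard library. It covers only this one measure, not encryption, access controls, or audits, and the field names and key handling are hypothetical: a real key would come from a secrets manager, not from source code.

```python
import hashlib
import hmac


def pseudonymize(record, sensitive_fields, secret_key):
    """Return a copy of the record with sensitive fields replaced by keyed hashes.

    The same input and key always give the same token, so the masked column
    can still be used for joins and distinct counts.
    """
    masked = dict(record)
    for field in sensitive_fields:
        value = masked.get(field)
        if value is not None:
            digest = hmac.new(
                secret_key, str(value).encode("utf-8"), hashlib.sha256
            )
            masked[field] = digest.hexdigest()
    return masked


if __name__ == "__main__":
    key = b"demo-key-do-not-use"  # placeholder for illustration only
    row = {"customer_id": 7, "email": "a@example.com"}
    print(pseudonymize(row, ["email"], key))
```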

Optimize for Performance

Regularly monitor and tune the performance of your ELT processes to handle increasing data volumes efficiently. This includes optimizing queries, managing resource allocation, and leveraging the scalability of cloud resources.
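Query tuning usually starts by asking the engine how it plans to run a query. The sketch below does this with SQLite's `EXPLAIN QUERY PLAN`, checking the plan before and after adding an index. SQLite is only a stand-in here: cloud data warehouses often rely on partitioning or clustering instead of indexes and expose their own plan-inspection commands, and the table and index names are hypothetical.

```python
import sqlite3

QUERY = "SELECT SUM(amount) FROM orders WHERE customer = ?"


def query_plan(conn, sql, params=()):
    """Return the planner's description of how SQLite would run a query."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    # The human-readable detail is the fourth column of each plan row.
    return " | ".join(row[3] for row in rows)


def build_demo():
    """Create an in-memory table with enough rows to make the plan meaningful."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE orders (order_id INTEGER, customer TEXT, amount REAL)"
    )
    conn.executemany(
        "INSERT INTO orders VALUES (?, ?, ?)",
        [(i, f"customer_{i % 100}", float(i)) for i in range(10_000)],
    )
    return conn


if __name__ == "__main__":
    conn = build_demo()
    print("before:", query_plan(conn, QUERY, ("customer_7",)))
    conn.execute("CREATE INDEX idx_orders_customer ON orders (customer)")
    print("after: ", query_plan(conn, QUERY, ("customer_7",)))
```

The exact wording of the plan varies between SQLite versions, but the plan printed after the index is created should mention `idx_orders_customer`, showing that the filter no longer needs a full table scan.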

Plan for Scalability

Design your ELT architecture with future growth in mind. Anticipate the need to handle more data sources, larger volumes of data, and more complex transformations.

By following these best practices, organizations can create a robust and scalable ELT environment that not only meets current data needs but is also prepared for the challenges of the future.

Bottom Line: ELT Process

The ELT process represents a modern approach to data management that aligns with the needs of agile, data-driven businesses. By embracing ELT, organizations can unlock the full potential of their data, driving insights and innovation. This approach leverages the scalability and performance of cloud-based data warehouses, enabling more flexible and efficient data processing.

With ELT, businesses can reduce infrastructure costs, empower data analysts, and seamlessly integrate with various cloud services, ultimately fostering a more dynamic and responsive data environment. However, it's important to be mindful of data consumption and optimize costs by carefully managing resource usage and leveraging cost-effective cloud solutions.

Opinions expressed by DZone contributors are their own.
