Zero Trust for AI: Building Security from the Ground Up

Zero-trust AI takes a proactive approach to security, protecting your data, workflows, and systems to keep everything safe and reliable.


As artificial intelligence (AI) transforms industries, it increasingly powers critical applications. With this innovation comes a pressing question: how do we keep AI systems secure? Unlike traditional applications, AI handles highly sensitive data, intricate models, and sprawling networks that don’t fit neatly within a conventional security perimeter. Traditional security models, built on the assumption that everything inside a defined network perimeter can be trusted, are proving inadequate for the highly distributed, dynamic, and sensitive nature of AI workflows. Where sensitive data, complex models, and distributed systems intersect, zero trust offers a proactive and holistic approach to security.

This article explores why AI needs zero trust, the fundamental principles that guide its application, and practical methods to safeguard AI systems from the outset.

Why AI Needs Zero Trust

AI systems present unique security challenges:

- Data sensitivity: AI models are trained on vast datasets, which often include sensitive or proprietary information. A breach can lead to data leakage or intellectual property theft.

- Model vulnerabilities: AI models are exposed to risks such as adversarial attacks, model poisoning, and inference attacks.

- Distributed ecosystem: AI workflows often span cloud environments, edge devices, and APIs, increasing the attack surface.

- Dynamic nature: The constant evolution of AI models and dependencies demands adaptive security measures.

Given these challenges, zero-trust principles provide a proactive foundation for securing AI systems.

Unique Security Needs of AI Systems

While the principles of zero trust — "never trust, always verify" — apply broadly across application types, implementing zero trust for AI systems presents unique challenges and requirements compared to more traditional applications like microservices. The differences arise due to the distinct nature of AI workflows, data sensitivity, and operational dynamics. Here are the key differences:

- Data Sensitivity and Lifecycle: AI systems rely heavily on sensitive datasets for training and inference. The data lifecycle in AI includes ingestion, storage, training, and deployment, each requiring meticulous protection.

- Model Vulnerabilities: AI models are susceptible to attacks like model poisoning, adversarial inputs, and inference attacks. Securing these assets requires a focus on model integrity and adversarial defenses.

- Distributed Ecosystem: AI workflows span cloud, edge, and on-premises environments, making it harder to enforce a consistent zero-trust policy.

- Dynamic Workflows: AI systems are highly dynamic, with models being retrained, updated, and redeployed frequently. This creates a constantly changing attack surface.

- Auditability: Regulatory compliance for AI involves tracking data lineage, model decisions, and training provenance, adding another layer of security and transparency requirements to zero trust.

- Attack Vectors: AI introduces unique attack vectors such as poisoning datasets during training, manipulating input pipelines, and stealing model intellectual property.

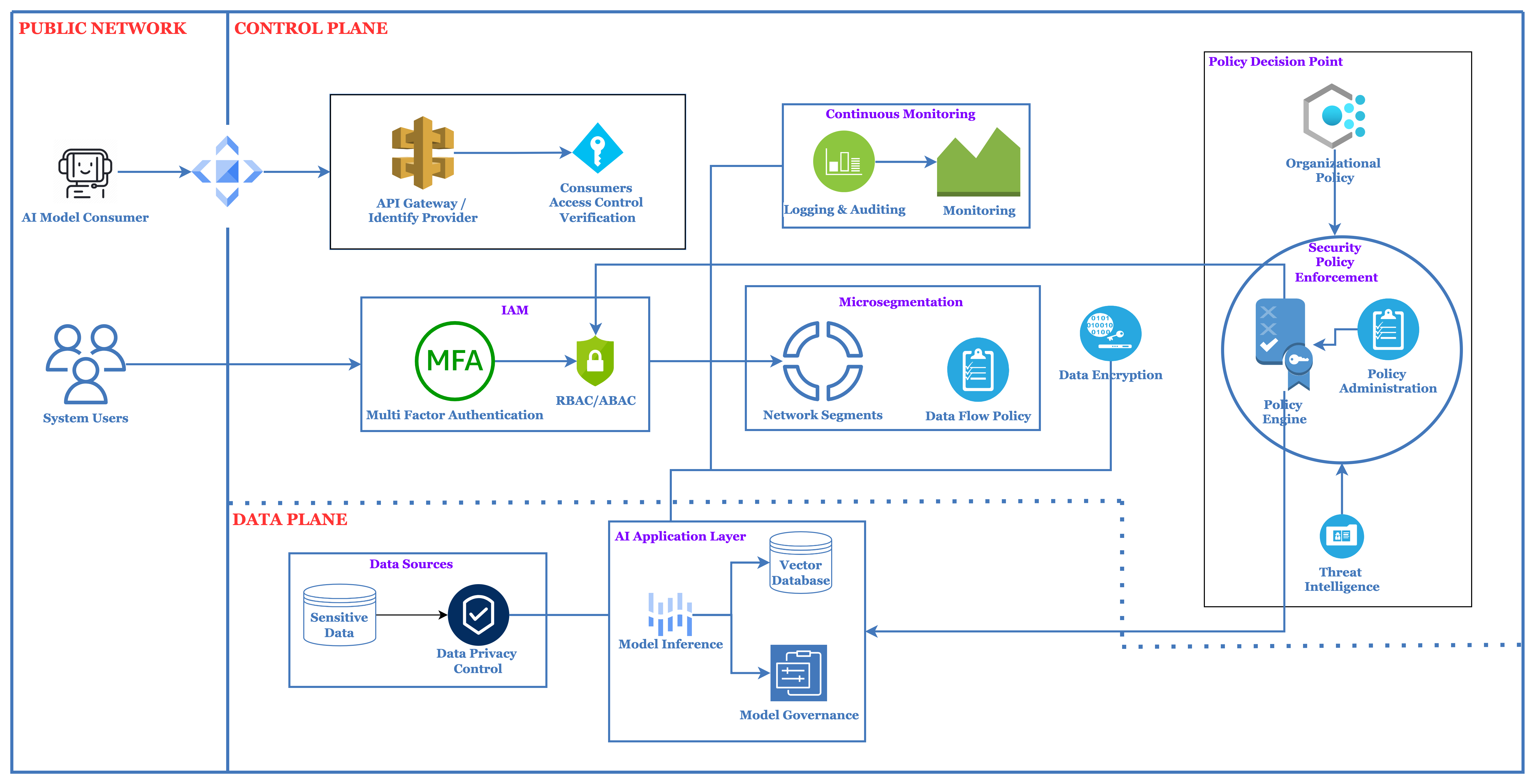

Core Principles of Zero Trust for AI Applications

Zero trust for AI applications is built on the following pillars:

1. Verify Identity at Every Access Point

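- Implement multi-factor authentication (MFA) for human users, and strong, short-lived credentials (such as mutual TLS certificates or workload identity tokens) for machines and services accessing AI resources.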

- Use role-based access control (RBAC) or attribute-based access control (ABAC) to restrict access to sensitive datasets and models.
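To make the RBAC/ABAC distinction concrete, here is a minimal Python sketch that layers an attribute check on top of a role check and denies by default. The role names, the `ROLE_PERMISSIONS` table, the sensitivity labels, and the `is_allowed` function are all hypothetical; a production system would typically delegate this decision to an IAM service or policy engine.

```python
from dataclasses import dataclass

# Hypothetical role-to-permission mapping (the RBAC layer).
ROLE_PERMISSIONS = {
    "data_scientist": {("dataset", "read"), ("model", "train")},
    "ml_engineer": {("model", "deploy"), ("model", "read")},
    "analyst": {("model", "infer")},
}

# Hypothetical sensitivity ordering used by the ABAC layer.
SENSITIVITY = {"public": 0, "internal": 1, "restricted": 2}


@dataclass(frozen=True)
class Subject:
    user_id: str
    roles: frozenset
    clearance: str  # highest sensitivity label this subject may touch


@dataclass(frozen=True)
class Resource:
    kind: str         # e.g. "dataset" or "model"
    sensitivity: str  # e.g. "public", "internal", "restricted"


def is_allowed(subject: Subject, action: str, resource: Resource) -> bool:
    """Deny by default; allow only if the RBAC and ABAC checks both pass."""
    # RBAC: at least one of the subject's roles must grant (kind, action).
    role_ok = any(
        (resource.kind, action) in ROLE_PERMISSIONS.get(role, set())
        for role in subject.roles
    )
    # ABAC: the resource's sensitivity must not exceed the subject's clearance.
    attr_ok = SENSITIVITY[resource.sensitivity] <= SENSITIVITY[subject.clearance]
    return role_ok and attr_ok
```

Requiring both checks means a data scientist with the right role still cannot read a dataset labeled above their clearance.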

2. Least Privilege Access

- Ensure users, applications, and devices have the minimum access required to perform their functions.

- Dynamically adjust permissions based on context, such as time, location, or behavioral anomalies.
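One way to apply least privilege dynamically is to issue short-lived, just-in-time grants and to drop all access when the context looks wrong. The sketch below assumes a hypothetical `Grant` type, a permission naming scheme like `dataset:read`, and an upstream anomaly signal; it illustrates the idea rather than any particular IAM product.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class Grant:
    permission: str       # hypothetical naming, e.g. "dataset:read"
    expires_at: datetime  # grants are short-lived by design


def issue_grant(permission: str, ttl_minutes: int, now: datetime) -> Grant:
    """Issue a just-in-time grant that expires automatically."""
    return Grant(permission, now + timedelta(minutes=ttl_minutes))


def effective_permissions(grants, now: datetime, anomaly_detected: bool,
                          allowed_locations, location: str) -> set:
    """Return only the permissions that are valid in the current context."""
    # A behavioral anomaly or an unexpected location drops access to zero.
    if anomaly_detected or location not in allowed_locations:
        return set()
    # Otherwise keep only grants that have not yet expired.
    return {g.permission for g in grants if g.expires_at > now}
```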

3. Continuous Monitoring and Validation

- Employ real-time monitoring of data flows, API usage, and model interactions.

- Use behavioral analytics to detect unusual activities, such as model exfiltration attempts.
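Model extraction often shows up as one client issuing far more inference queries than its peers. As an assumption-laden sketch of behavioral analytics, the function below flags such clients using a modified z-score over per-client query counts. The input shape (a dict of client ID to query count for some time window) and the 3.5 threshold, a commonly used cut-off for this score, are illustrative choices, not tuned values.

```python
from statistics import median


def flag_possible_extraction(query_counts: dict, threshold: float = 3.5) -> set:
    """Flag clients whose query volume is far above the fleet's typical volume.

    Uses the modified z-score, built on the median absolute deviation (MAD),
    which is robust to the very outliers we are trying to find.
    """
    counts = list(query_counts.values())
    med = median(counts)
    mad = median(abs(c - med) for c in counts)
    if mad == 0:
        # No spread in the baseline: flag anything strictly above the median.
        return {client for client, c in query_counts.items() if c > med}
    flagged = set()
    for client, c in query_counts.items():
        # 0.6745 scales the MAD so the score is comparable to a standard
        # z-score for normally distributed data.
        modified_z = 0.6745 * (c - med) / mad
        if modified_z > threshold:
            flagged.add(client)
    return flagged
```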

4. Secure the Entire Lifecycle

- Encrypt data at rest, in transit, and during processing in AI pipelines.

- Validate and secure third-party datasets and pre-trained models before integration.
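A basic but effective control for third-party datasets and pre-trained models is to pin each artifact to a known SHA-256 digest and refuse anything that does not match. This sketch uses only the Python standard library; where the expected digest comes from (a vendor's published checksum, an internal registry) is an assumption left to your environment, and the function names are hypothetical.

```python
import hashlib


def sha256_of_file(path: str, chunk_size: int = 1 << 20) -> str:
    """Stream the file so large model weights never have to fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_artifact(path: str, expected_sha256: str) -> None:
    """Raise if the downloaded artifact does not match the pinned digest."""
    actual = sha256_of_file(path)
    if actual != expected_sha256.lower():
        raise ValueError(f"checksum mismatch for {path}: got {actual}")
```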

5. Micro-Segmentation

- Isolate components of the AI system (e.g., training environment, inference engine) to limit lateral movement in case of a breach.
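Micro-segmentation boils down to a default-deny rule: a component may talk only to the components and ports it explicitly needs. The Python sketch below models that rule with hypothetical segment names and ports; in practice it would be enforced by firewalls, service-mesh policies, or Kubernetes NetworkPolicy objects rather than application code.

```python
# Hypothetical segments: each AI component lives in its own segment, and
# only the (source, destination) pairs listed here may communicate.
ALLOWED_FLOWS = {
    ("feature_store", "training"): {443},
    ("training", "model_registry"): {443},
    ("model_registry", "inference"): {443},
    ("api_gateway", "inference"): {8443},
}


def flow_permitted(src_segment: str, dst_segment: str, port: int) -> bool:
    """Default deny: a flow is allowed only if it is explicitly listed."""
    return port in ALLOWED_FLOWS.get((src_segment, dst_segment), set())
```

Because flows are directional, a compromised inference service cannot reach back into the training environment even though the registry can push models to it.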

Key Components of Zero Trust for AI Applications

1. Identity and Access Management (IAM)

Role

Ensures that only authenticated and authorized users, devices, and services can access AI resources.

Key Features

- Multi-factor authentication (MFA).

- Role-based access control (RBAC) or attribute-based access control (ABAC).

- Fine-grained permissions tailored to specific AI tasks (e.g., training, inference, or monitoring).

2. Data Security and Encryption

Role

Protects sensitive data used in training and inference from unauthorized access and tampering.

Key Features

- Encryption of data at rest, in transit, and during processing (e.g., homomorphic encryption for computations).

- Secure data masking and anonymization for sensitive datasets.

- Secure storage solutions for data lineage tracking and provenance.
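Masking and pseudonymization can be applied before data ever reaches a training pipeline. The sketch below assumes a hypothetical record layout with `user_id`, `note`, and `label` fields. It replaces the identifier with a keyed HMAC token (stable, so joins across tables still work) and masks e-mail addresses in free text. Note that keyed pseudonymization is not full anonymization: whoever holds the key can still recompute tokens and link records.

```python
import hashlib
import hmac
import re

# A deliberately simple e-mail pattern; real PII detection needs more.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def pseudonymize(value: str, key: bytes) -> str:
    """Keyed hash: the same input always maps to the same token, but the
    token cannot be linked back to the original value without the key."""
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()[:16]


def mask_emails(text: str) -> str:
    """Replace every e-mail address in free text with a fixed placeholder."""
    return EMAIL_RE.sub("[EMAIL]", text)


def prepare_record(record: dict, key: bytes) -> dict:
    """Pseudonymize the identifier and mask e-mails in the free-text field."""
    return {
        "user_token": pseudonymize(record["user_id"], key),
        "note": mask_emails(record["note"]),
        "label": record["label"],
    }
```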

3. Model Protection

Role

Safeguards AI models from theft, manipulation, and adversarial attacks.

Key Features

- Model encryption and digital signing to verify integrity.

- Adversarial training to make models resilient against crafted inputs.

- Access controls for model endpoints to prevent unauthorized use.
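To illustrate integrity verification of a model artifact, the sketch below tags the model bytes with an HMAC-SHA256 at publish time and checks the tag before serving. This is a simplified, standard-library stand-in: HMAC relies on a shared secret, whereas true digital signing uses an asymmetric key pair (for example, Ed25519) so that verifiers never hold the signing key. The function names are hypothetical.

```python
import hashlib
import hmac


def sign_model(model_bytes: bytes, key: bytes) -> str:
    """Produce an integrity tag when the model is published to the registry."""
    return hmac.new(key, model_bytes, hashlib.sha256).hexdigest()


def load_verified_model(model_bytes: bytes, tag: str, key: bytes) -> bytes:
    """Refuse to serve a model whose bytes no longer match the published tag."""
    expected = sign_model(model_bytes, key)
    # Constant-time comparison so the check does not leak partial matches.
    if not hmac.compare_digest(expected, tag):
        raise PermissionError("model integrity check failed")
    return model_bytes
```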

4. Endpoint and API Security

Role

Ensures secure communication between AI systems and their consumers or dependencies.

Key Features

- API authentication and authorization (e.g., OAuth 2.0 with JWT-formatted access tokens).

- API rate limiting and throttling to prevent abuse.

- Encryption of API communications using TLS.
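Rate limiting is commonly implemented with a token bucket, which allows short bursts while capping the sustained request rate. The sketch below keeps one bucket per API key in memory; the keying scheme, the default rate and capacity, and the injectable `clock` (there to make the class testable) are assumptions. A multi-instance deployment would need shared state, for example in a central cache.

```python
import time


class TokenBucket:
    """Token-bucket limiter: refills at `rate` tokens/second, bursts up to `capacity`."""

    def __init__(self, rate: float, capacity: int, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.tokens = float(capacity)  # start full so an initial burst is allowed
        self.clock = clock
        self.last = clock()

    def allow(self, cost: float = 1.0) -> bool:
        now = self.clock()
        # Refill in proportion to elapsed time, never above capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False


buckets = {}  # one bucket per API key (hypothetical keying scheme)


def allow_request(api_key: str, rate: float = 5.0, capacity: int = 10,
                  clock=time.monotonic) -> bool:
    """Create the caller's bucket on first use, then charge one token."""
    if api_key not in buckets:
        buckets[api_key] = TokenBucket(rate, capacity, clock)
    return buckets[api_key].allow()
```

Charging a higher `cost` for expensive requests, such as long prompts or batch inference, is one way to adapt the same idea to AI endpoints.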

5. Zero Trust Network Access (ZTNA)

Role

Implements micro-segmentation and strict network access controls to minimize attack surfaces.

Key Features

- Isolating AI environments (e.g., training, development, inference) to prevent lateral movement.

- Continuous monitoring of network traffic for anomalies.

- Network encryption and secure tunneling for hybrid or multi-cloud setups.

6. Continuous Monitoring and Analytics

Role

Detects and responds to potential threats or abnormal behavior in real time.

Key Features

- AI-driven threat detection systems to analyze behavioral patterns.

- Logging and auditing of all access and activity for forensic analysis.

- Anomaly detection to identify unusual data flows or model interactions.
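Audit logs are only useful for forensics if tampering can be detected. A common technique is hash chaining, where each entry includes the hash of the previous one. The sketch below is a minimal in-memory version with a hypothetical `AuditLog` class; a real deployment would persist entries and periodically anchor the latest hash in write-once storage, because an attacker who can rewrite the entire log could otherwise recompute every hash.

```python
import hashlib
import json
import time


def _entry_hash(body: dict) -> str:
    # Canonical JSON (sorted keys) so the hash is reproducible.
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


class AuditLog:
    """Append-only log where each entry commits to the previous entry's hash,
    so editing or deleting a past entry breaks the chain."""

    GENESIS = "0" * 64

    def __init__(self):
        self.entries = []

    def append(self, actor: str, action: str, resource: str) -> dict:
        prev_hash = self.entries[-1]["hash"] if self.entries else self.GENESIS
        body = {"ts": time.time(), "actor": actor, "action": action,
                "resource": resource, "prev": prev_hash}
        body["hash"] = _entry_hash(body)  # hash covers every field except itself
        self.entries.append(body)
        return body

    def verify(self) -> bool:
        """Recompute every hash and check each link back to the genesis value."""
        prev_hash = self.GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if entry["prev"] != prev_hash or entry["hash"] != _entry_hash(body):
                return False
            prev_hash = entry["hash"]
        return True
```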

7. Automation and Orchestration

Role

Streamlines security enforcement and incident response.

Key Features

- Automated remediation workflows for detected threats.

- Dynamic policy adjustments based on changing contexts (e.g., time, location, or behavior).

- Integration with Security Orchestration, Automation, and Response (SOAR) platforms.
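Automated remediation is often expressed as playbooks that map an alert type to an ordered list of response steps. In this sketch, the alert types, playbook contents, and step functions are hypothetical, and each step only records what it would do; in a real system these steps would call your IAM, orchestration, or SOAR platform.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Alert:
    kind: str     # hypothetical alert types, e.g. "model_exfiltration"
    subject: str  # the user, service, or API key involved


# Placeholder steps: each appends a description instead of taking real action.
def revoke_api_key(alert: Alert, log: list) -> None:
    log.append(f"revoked api key {alert.subject}")


def rotate_credentials(alert: Alert, log: list) -> None:
    log.append(f"rotated credentials for {alert.subject}")


def quarantine_workload(alert: Alert, log: list) -> None:
    log.append(f"isolated workload {alert.subject}")


def escalate_to_human(alert: Alert, log: list) -> None:
    log.append(f"opened ticket for {alert.kind} on {alert.subject}")


# Hypothetical playbooks: alert type -> ordered remediation steps.
PLAYBOOKS = {
    "model_exfiltration": [revoke_api_key, escalate_to_human],
    "credential_leak": [rotate_credentials, escalate_to_human],
    "poisoned_data_detected": [quarantine_workload, escalate_to_human],
}


def respond(alert: Alert, log: list) -> None:
    # Unknown alert types fall back to human triage rather than being ignored.
    for step in PLAYBOOKS.get(alert.kind, [escalate_to_human]):
        step(alert, log)
```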

8. Governance and Compliance

Role

Ensures adherence to regulatory requirements and organizational policies.

Key Features

- Detailed audit trails of data usage, model training, and inference processes.

- Compliance checks against data protection regulations such as GDPR and CCPA, as well as AI-specific regulations and AI ethics guidelines.

- Transparency mechanisms to validate AI decisions (e.g., explainability tools).

9. DevSecOps Integration

Role

Embeds security into the AI development and deployment lifecycle.

Key Features

- Secure CI/CD pipelines for AI workflows, ensuring vulnerabilities are caught early.

- Vulnerability scanning for third-party datasets, pre-trained models, and AI libraries.

- Security testing of AI models and their APIs during deployment.
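Pre-trained models distributed as Python pickles are a concrete DevSecOps concern, because unpickling can import and call arbitrary objects. The sketch below uses the standard library's `pickletools.genops` to disassemble a pickle without loading it and flags opcodes that import or invoke objects, so a CI job can fail before the model is ever loaded. It is deliberately strict: legitimate pickles of library objects also use these opcodes, so real scanners typically compare imported names against an allow-list, and formats that wrap pickles inside archives need to be unpacked first. Weights-only formats such as safetensors avoid the problem entirely. The `gate_model_file` helper is hypothetical.

```python
import pickletools

# Opcodes that import objects by name or invoke callables while unpickling.
UNSAFE_OPCODES = {"GLOBAL", "STACK_GLOBAL", "INST", "REDUCE", "OBJ", "NEWOBJ", "NEWOBJ_EX"}


def scan_pickle(data: bytes) -> list:
    """Return (opcode name, byte offset) pairs for risky opcodes.

    The bytes are only disassembled, never unpickled, so scanning is safe.
    """
    findings = []
    for opcode, _arg, pos in pickletools.genops(data):
        if opcode.name in UNSAFE_OPCODES:
            findings.append((opcode.name, pos))
    return findings


def gate_model_file(path: str) -> None:
    """CI gate: fail the pipeline if a pickled model could run code on load."""
    with open(path, "rb") as f:
        findings = scan_pickle(f.read())
    if findings:
        raise SystemExit(f"{path}: unsafe pickle opcodes {findings}")
```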

10. Edge and IoT Security (if applicable)

Role

Protects AI models deployed on edge devices or IoT systems.

Key Features

- Device authentication and secure provisioning.

- End-to-end encryption between edge devices and central systems.

- Lightweight anomaly detection for resource-constrained edge environments.
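On constrained edge devices, a detector that needs constant memory and a handful of arithmetic operations per reading is often sufficient. The sketch below tracks an exponentially weighted moving mean and variance and flags readings more than `k` standard deviations from the running mean; `alpha`, `k`, and the warm-up length are illustrative defaults, not recommendations.

```python
class EwmaDetector:
    """Constant-memory streaming anomaly detector.

    Keeps an exponentially weighted mean and variance; a reading is anomalous
    when it lies more than `k` standard deviations from the running mean.
    """

    def __init__(self, alpha: float = 0.1, k: float = 4.0, warmup: int = 10):
        self.alpha, self.k, self.warmup = alpha, k, warmup
        self.mean = 0.0
        self.var = 0.0
        self.n = 0

    def update(self, x: float) -> bool:
        self.n += 1
        if self.n == 1:
            self.mean = x  # first reading seeds the baseline
            return False
        diff = x - self.mean
        std = self.var ** 0.5
        # Only start flagging once the baseline has had time to settle.
        is_anomaly = self.n > self.warmup and std > 0 and abs(diff) > self.k * std
        if is_anomaly:
            return True  # keep anomalous readings out of the baseline
        # Incremental exponentially weighted update of mean and variance.
        incr = self.alpha * diff
        self.mean += incr
        self.var = (1 - self.alpha) * (self.var + diff * incr)
        return False
```

Anomalous readings are excluded from the baseline so that a burst of bad values does not inflate the variance and mask the ones that follow; the trade-off is that a legitimate, permanent shift in the signal keeps being flagged until the detector is reset.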

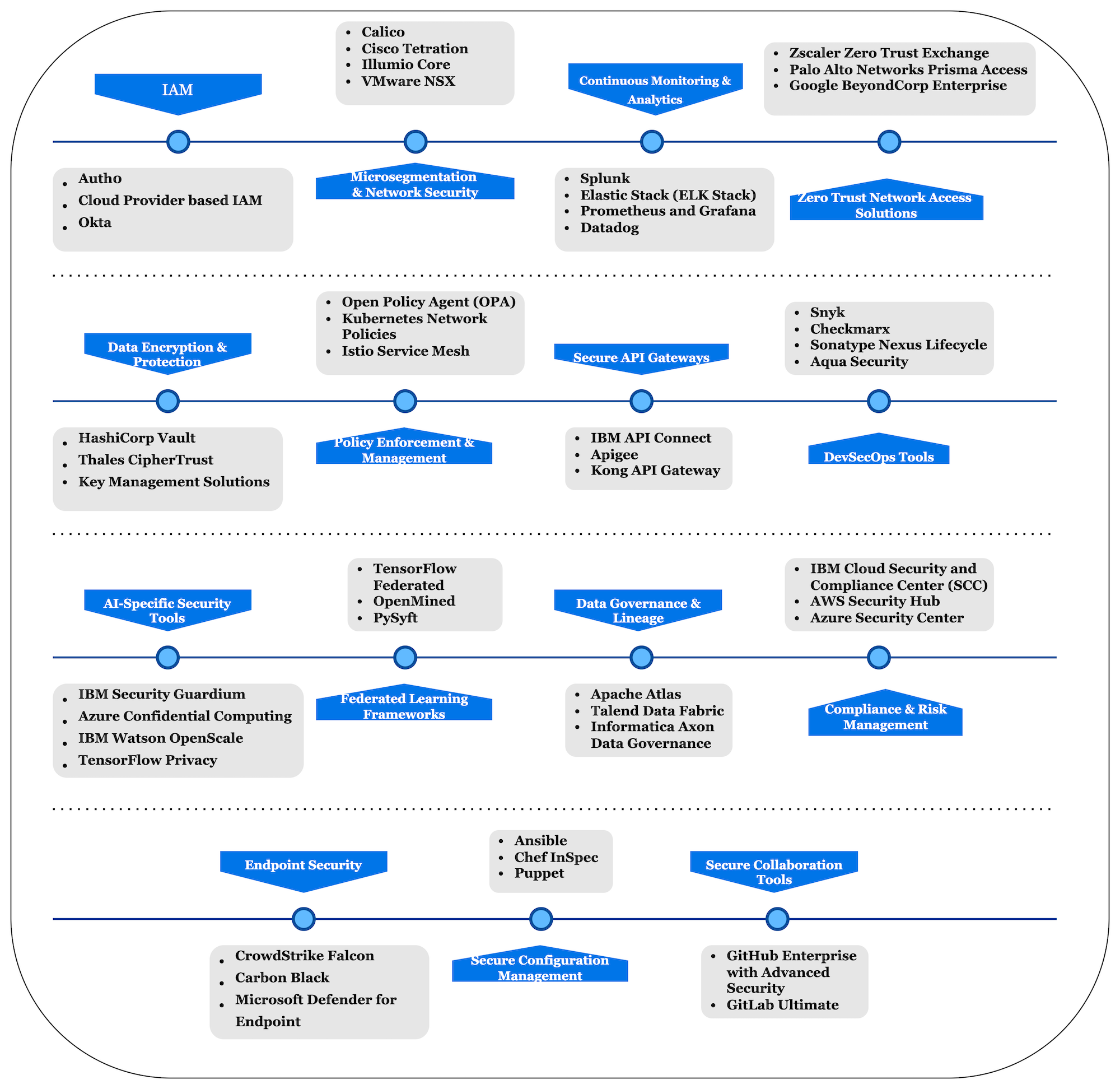

Tools and Frameworks for Zero Trust AI

The dynamic, distributed, and sensitive nature of AI applications introduces unique security challenges. Tools and frameworks specifically designed for zero trust in AI are essential for the following reasons:

- Protecting sensitive data: AI applications handle vast amounts of sensitive data, including personal and proprietary information. Tools for encryption and access control help safeguard data from breaches and misuse.

- Securing models against threats: AI models are susceptible to adversarial attacks, model theft, and data poisoning. Specialized tools can detect vulnerabilities, fortify models, and ensure their integrity during training and inference.

- Managing complex ecosystems: AI workflows span cloud, edge, and hybrid environments, involving multiple stakeholders and systems. Frameworks for identity and access management, network segmentation, and monitoring ensure secure interactions across this distributed ecosystem.

- Compliance and transparency: With stringent regulatory requirements affecting AI systems (e.g., data protection laws such as GDPR and CCPA), these tools help ensure compliance by enforcing audit trails, encryption, and explainability.

- Enhancing resilience: By automating threat detection, continuous monitoring, and incident response, these tools make AI systems more resilient to sophisticated attacks.

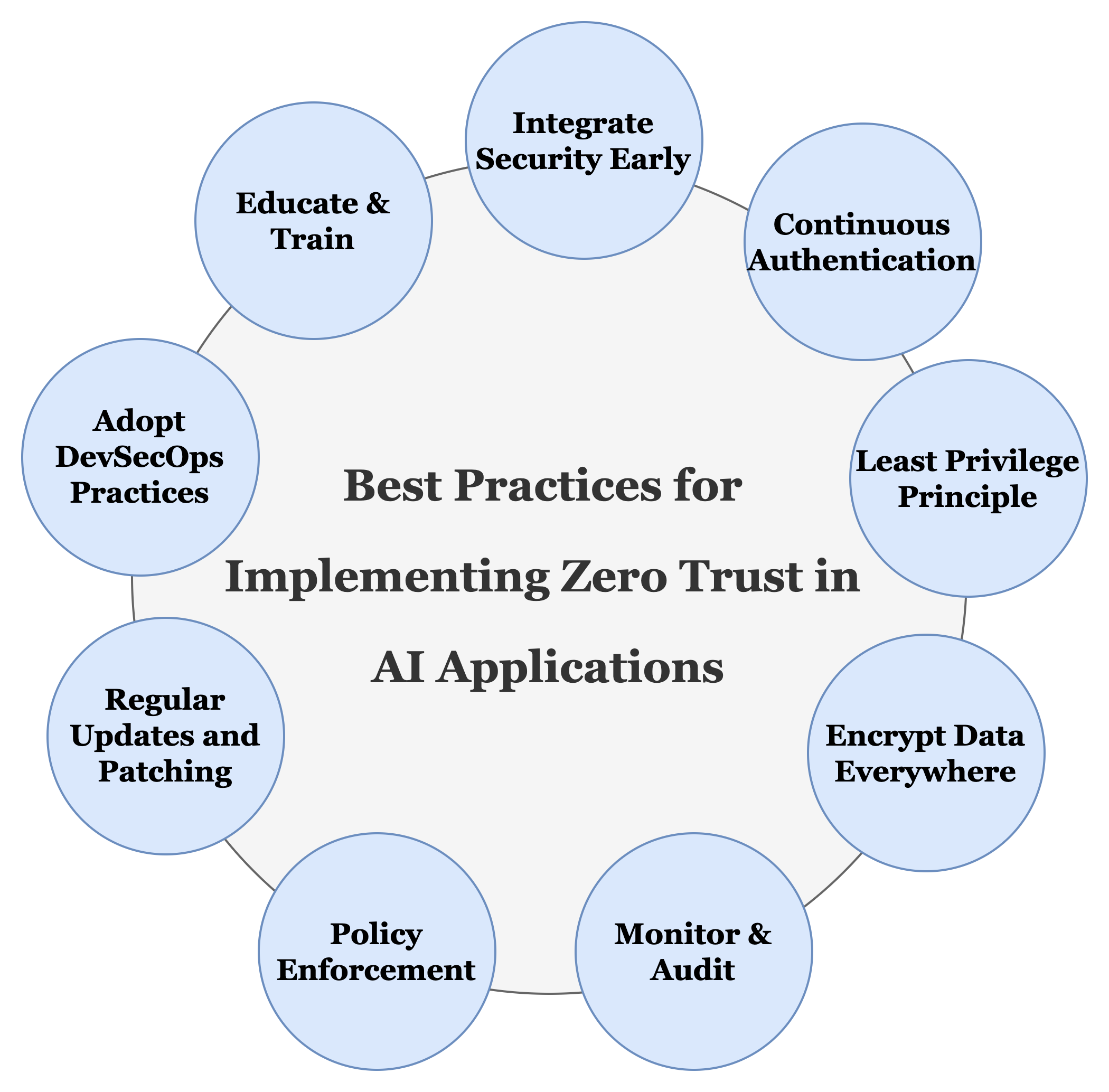

Best Practices for Zero Trust AI

Implementing zero trust for AI applications requires a proactive and comprehensive approach to secure every stage of the AI lifecycle.

The following best practices are grouped by key security principle:

Integrate Security Early

- Embed security measures from the development phase to deployment and maintenance.

- Use threat modeling and security-first design principles to identify potential risks in AI workflows.

Continuous Authentication

- Enforce multi-factor authentication (MFA) for users and services accessing AI systems.

- Implement adaptive authentication methods that adjust security based on context (e.g., device, location, or behavior).
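Adaptive authentication usually reduces to scoring the risk of a request and choosing between allowing it, demanding a step-up factor, or denying it. The signals, weights, and thresholds below are hypothetical and uncalibrated; the sketch only shows the shape of the decision logic.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LoginContext:
    known_device: bool
    usual_country: bool
    within_working_hours: bool
    recent_failed_attempts: int


def risk_score(ctx: LoginContext) -> int:
    """Additive risk score; the weights are illustrative, not calibrated."""
    score = 0
    if not ctx.known_device:
        score += 40
    if not ctx.usual_country:
        score += 30
    if not ctx.within_working_hours:
        score += 10
    score += min(ctx.recent_failed_attempts, 5) * 10  # cap this signal's weight
    return score


def auth_decision(ctx: LoginContext) -> str:
    """Map risk to an action: allow, require step-up MFA, or deny."""
    score = risk_score(ctx)
    if score < 30:
        return "allow"
    if score < 70:
        return "step_up_mfa"
    return "deny"
```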

Apply the Least Privilege Principle

- Restrict access to the minimum level necessary for users and services to perform their tasks.

- Regularly review and update access controls to limit potential attack surfaces.

Encrypt Data Everywhere

- Ensure data encryption at rest, in transit, and during processing to protect sensitive AI training and inference data.

- Use advanced techniques like homomorphic encryption and secure enclaves for sensitive computations.

Monitor and Audit

- Deploy advanced monitoring tools to track anomalies in AI model behavior and data access patterns.

- Maintain comprehensive audit trails for data usage, model interactions, and API activities to detect and respond to suspicious activities.

Enforce Security Policies

- Use policy engines like Open Policy Agent (OPA) to enforce consistent security policies across microservices, data pipelines, and AI components.

- Define and automate policy enforcement to ensure compliance across environments.
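A service typically consults OPA over its REST Data API: it POSTs a JSON `input` document to `/v1/data/<policy path>` and reads the `result` field of the response. The Python sketch below assumes an OPA instance reachable on its default port 8181 and a hypothetical policy whose `allow` rule lives at `ai/authz/allow`; the Rego policy itself is not shown. It fails closed, treating network errors and undefined results as a deny.

```python
import json
import urllib.request

OPA_URL = "http://localhost:8181"  # assumption: OPA sidecar on its default port


def build_opa_request(policy_path: str, input_doc: dict) -> urllib.request.Request:
    """Build a POST to OPA's Data API with the request wrapped in {"input": ...}."""
    body = json.dumps({"input": input_doc}).encode("utf-8")
    return urllib.request.Request(
        f"{OPA_URL}/v1/data/{policy_path}",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def is_authorized(policy_path: str, input_doc: dict, timeout: float = 2.0) -> bool:
    """Ask OPA for a decision; any error or missing result is treated as deny."""
    try:
        request = build_opa_request(policy_path, input_doc)
        with urllib.request.urlopen(request, timeout=timeout) as resp:
            decision = json.load(resp)
    except (OSError, ValueError):
        return False  # fail closed on network errors or malformed responses
    # OPA omits "result" when the queried rule is undefined for this input.
    return decision.get("result") is True
```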

Regular Updates and Patching

- Continuously update and patch all software components, including AI libraries, models, and dependencies, to mitigate vulnerabilities.

- Automate patch management in CI/CD pipelines to streamline the process.

Adopt DevSecOps Practices

- Integrate security testing, such as static application security testing (SAST) and dynamic application security testing (DAST), into the CI/CD pipeline.

- Use automated vulnerability scanning tools to identify and resolve issues early in the development process.

Educate and Train Teams

- Conduct regular training for developers, data scientists, and operations teams on zero-trust principles and the importance of security in AI systems.

- Foster a culture of shared responsibility for security across the organization.

By following these practices, organizations can establish a robust zero-trust framework that secures AI applications against evolving threats, reduces risks, and ensures compliance with regulatory standards.

Conclusion

As AI continues to shape our world, powering critical applications and driving innovation, it also brings unique security challenges that can’t be ignored. Sensitive data, distributed workflows, and the need to protect model integrity demand a proactive and comprehensive approach, and that’s where zero trust comes in. Zero trust offers a strong foundation for securing AI systems by focusing on principles like continuous authentication, least privilege access, and real-time monitoring. When paired with the right tools, best practices, and components such as encrypted pipelines and model protection, it helps organizations stay ahead of threats.

Opinions expressed by DZone contributors are their own.
